Deploy XtremeCloud SSO to an Istio Service Mesh

Introduction

Istio is an open platform for providing a uniform way to integrate microservices, manage traffic flow across microservices, enforce policies and aggregate telemetry data. Istio’s control plane provides an abstraction layer over the underlying cluster management platform, such as Kubernetes.

Istio is composed of these components:

- Envoy - Sidecar proxies, one per microservice, that handle ingress/egress traffic between services in the cluster and from a service to external services. The proxies form a secure microservice mesh that provides a rich set of functions such as service discovery, layer-7 routing, circuit breakers, policy enforcement, and telemetry recording and reporting.

  Note: The service mesh is not an overlay network. It simplifies and enhances how microservices in an application talk to each other over the network provided by the underlying platform.

- Istiod - The Istio control plane. It provides service discovery, configuration, and certificate management. It consists of the following sub-components:

  - Pilot - Responsible for configuring the proxies at runtime.

  - Citadel - Responsible for certificate issuance and rotation.

  - Galley - Responsible for validating, ingesting, aggregating, transforming, and distributing configuration within Istio.

- Operator - Provides user-friendly options for operating the Istio service mesh.

Implementation Considerations

Using NGINX as an Ingress Proxy in the Istio Service Mesh

Outside of a service mesh, XtremeCloud SSO containers are typically fronted by an NGINX Kubernetes Ingress Controller (KIC). Although this provides the required functionality, it does not provide end-to-end encryption to the Single Sign-On (SSO) services, because SSL/TLS is terminated at the NGINX-based KIC. Some organizations find this acceptable, depending on how well their Kubernetes clusters are isolated on the network.

For organizations that need encryption all the way to the application services, an alternative approach is needed. Without Istio, SSL/TLS must be enabled in the XtremeCloud SSO WildFly-based application server itself. Although Eupraxia Labs supports this configuration, we generally advise against it: it adds complexity to the container's configuration and requires the XtremeCloud SSO container to perform cryptographic operations that typically consume about 20% of its compute power.

With Istio, not only is the payload encrypted in flight, but the endpoint itself is protected, since only clients pre-authorized through mutual TLS (mTLS) can connect to the SSO service. This is consistent with the Istio-based Zero Trust Architecture (ZTA) that we consider essential in a CyberSAAFE configuration.

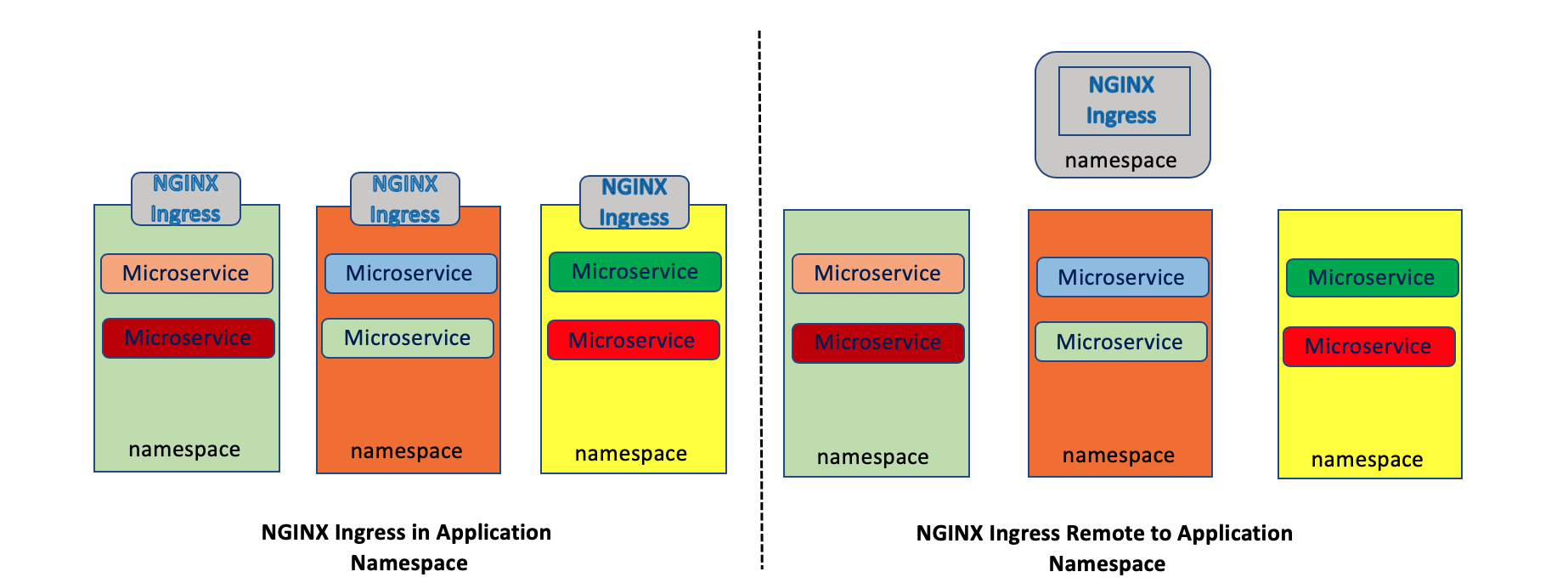

This brings us to the idea of using NGINX as an ingress proxy with the Istio Service Mesh. There are three (3) approaches to deploy an ingress proxy, such as NGINX, within an Istio Service Mesh:

- Run dedicated ingress proxies within each team or microservice (Dedicated Model)

- A set of ingress proxies shared amongst many teams or microservices (Shared Model)

- A combination, where a team has a dedicated ingress that spans multiple namespaces they own, or the organization has a combination of teams with dedicated ingresses and teams that may use a shared ingress, or a combination of all the above (Hybrid Model). There is a lot of flexibility here.

The diagram below illustrates the Dedicated Model (on the left) and the Shared Model.

We're going to focus on the Dedicated Model, since the microservices in a namespace are likely to be fronted by an NGINX proxy in that same namespace. That alone is a lot for a team to manage, and centralizing on a single large NGINX proxy (or even several) moves us away from some of the benefits of microservices.

Let's install an NGINX ingress proxy into an Istio Service Mesh. We do this manually here to illustrate and explain each step; organizations with an XtremeCloud SSO subscription are provided with Helm charts and a Codefresh CI/CD pipeline for an automated installation. We install on Minikube to show that the implementation is flexible enough to run even on a desktop.

First, download the Istio version of your choice, referring to our Certification Matrix when installing XtremeCloud SSO. Here we use Istio 1.6.5 on Kubernetes 1.18.

curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.6.5 sh -

% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
100 107 100 107 0 0 100 0 0:00:01 0:00:01 --:--:-- 100
100 3896 100 3896 0 0 2014 0 0:00:01 0:00:01 --:--:-- 0

Downloading istio-1.6.5 from https://github.com/istio/istio/releases/download/1.6.5/istio-1.6.5-osx.tar.gz ...
Istio 1.6.5 Download Complete!

Istio has been successfully downloaded into the istio-1.6.5 folder on your system.

Next Steps:
See https://istio.io/docs/setup/kubernetes/install/ to add Istio to your Kubernetes cluster.

To configure the istioctl client tool for your workstation,
add the /Users/davidjbrewer/apps/test-stuff/istio-1.6.5/bin directory to your environment path variable with:
    export PATH="$PATH:/Users/davidjbrewer/apps/istio-1.6.5/bin"

Begin the Istio pre-installation verification check by running:
    istioctl verify-install

Need more information? Visit https://istio.io/docs/setup/kubernetes/install/
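The download script resolves the release tarball from GitHub for you. If you would rather fetch a pinned release directly (for example, in a CI job), the URL can be built from the version and platform. The helper below is a minimal sketch; the name `istio_tarball_url` is hypothetical, and the URL pattern is inferred from the single `osx` download URL printed above, so verify any other platform suffix against the Istio releases page.

```shell
# Build the GitHub release URL for an Istio tarball (hypothetical helper).
# Pattern inferred from the download script output above:
#   https://github.com/istio/istio/releases/download/<ver>/istio-<ver>-<platform>.tar.gz
# Only the "osx" platform suffix is confirmed there; verify any others.
istio_tarball_url() {
  local version="$1" platform="$2"
  if [ -z "$version" ] || [ -z "$platform" ]; then
    echo "usage: istio_tarball_url VERSION PLATFORM" >&2
    return 2
  fi
  printf 'https://github.com/istio/istio/releases/download/%s/istio-%s-%s.tar.gz\n' \
    "$version" "$version" "$platform"
}
```

For example: `curl -L -o istio-1.6.5.tar.gz "$(istio_tarball_url 1.6.5 osx)" && tar -xzf istio-1.6.5.tar.gz`.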

Move to the Istio package directory.

$ cd istio-1.6.5

Add the istioctl client to your path.

$ export PATH=$PWD/bin:$PATH

Deploy Istio (with global mTLS):

$ istioctl manifest apply \
    --set values.global.mtls.enabled=true \
    --set values.global.controlPlaneSecurityEnabled=true

We're going to create a new namespace and label it for automatic sidecar proxy injection:

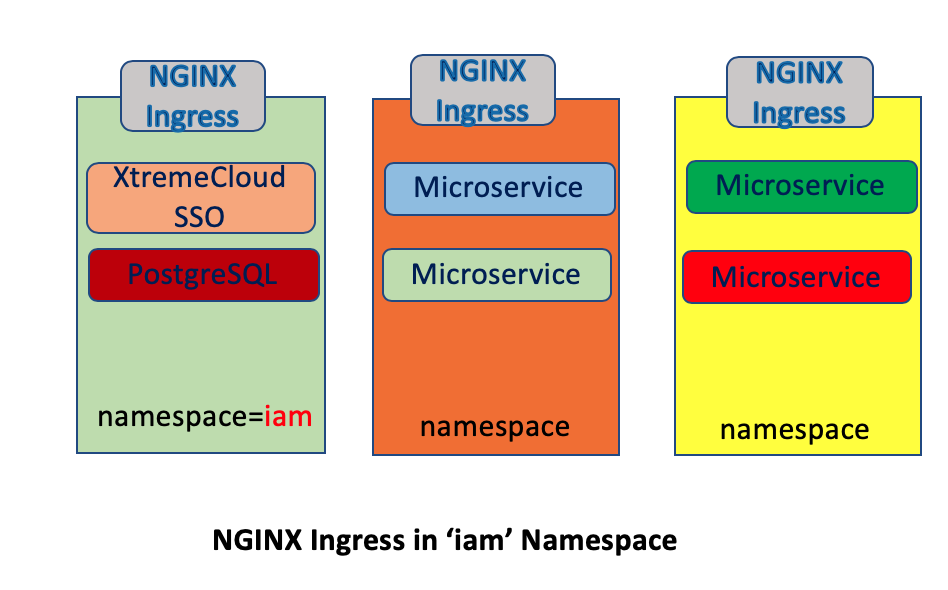

$ kubectl create ns iam

$ kubectl label namespace iam istio-injection=enabled

We can confirm that namespace ‘iam’ is injection-enabled:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl get namespace -L istio-injection
NAME                   STATUS   AGE     ISTIO-INJECTION
default                Active   4d21h   enabled
iam                    Active   3d2h    enabled
ingress                Active   4d21h   enabled
istio-system           Active   4d21h   disabled
kube-node-lease        Active   4d21h
kube-public            Active   4d21h
kube-system            Active   4d21h
kubernetes-dashboard   Active   4d21h
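To script this check instead of reading the table by eye, the output can be filtered for one namespace. This is a sketch; `ns_injection_enabled` is a hypothetical helper, and it assumes the column layout shown above, where namespaces without the label simply have no ISTIO-INJECTION value.

```shell
# Succeed if NAMESPACE shows istio-injection=enabled in the output of
#   kubectl get namespace -L istio-injection
# read from stdin (hypothetical helper). Unlabeled namespaces have no 4th column.
ns_injection_enabled() {
  local ns="$1"
  awk -v ns="$ns" '
    NR > 1 && $1 == ns { found = 1; if ($4 == "enabled") ok = 1 }
    END { if (found && ok) exit 0; exit 1 }
  '
}
```

For example: `kubectl get namespace -L istio-injection | ns_injection_enabled iam && echo "iam: sidecar injection enabled"`.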

Another quick check is to describe the namespace:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl describe namespace iam
Name:         iam
Labels:       istio-injection=enabled
Annotations:  <none>
Status:       Active

No resource quota.

No LimitRange resource.

Deploy the NGINX Ingress Controller and configurations:

export KUBE_API_SERVER_IP=$(kubectl get svc kubernetes -n default -o jsonpath='{.spec.clusterIP}')/32

sed "s#__KUBE_API_SERVER_IP__#${KUBE_API_SERVER_IP}#" nginx-in-istio-iam-ns.yaml | kubectl apply -f -
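The `sed` command above only substitutes the placeholder; if the template lacked `__KUBE_API_SERVER_IP__`, the manifest would be applied without the intended outbound exclusion and nothing would complain. A slightly stricter variant, sketched below with a hypothetical `render_nginx_manifest` function, checks that the address carries the `/32` suffix used above and that the placeholder is present before emitting the manifest.

```shell
# Substitute the API server CIDR into a manifest template read from stdin
# (hypothetical helper mirroring the sed command above).
render_nginx_manifest() {
  local cidr="$1" template
  case "$cidr" in
    */32) ;;
    *) echo "render_nginx_manifest: expected a /32 CIDR, got '$cidr'" >&2; return 2 ;;
  esac
  template=$(cat)
  # Without the placeholder, excludeOutboundIPRanges would silently keep
  # whatever the template contains, so treat a missing placeholder as an error.
  if ! printf '%s\n' "$template" | grep -q '__KUBE_API_SERVER_IP__'; then
    echo "render_nginx_manifest: placeholder not found in template" >&2
    return 1
  fi
  printf '%s\n' "$template" | sed "s#__KUBE_API_SERVER_IP__#${cidr}#g"
}
```

For example: `render_nginx_manifest "$KUBE_API_SERVER_IP" < nginx-in-istio-iam-ns.yaml | kubectl apply -f -`.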

This is the NGINX Controller configuration for deployment into the ‘iam’ namespace:

# nginx-in-istio-iam-ns.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  namespace: iam
  annotations:
    # cert-manager v0.11 and later (the helm ls output below shows v0.16.0)
    # read cert-manager.io/cluster-issuer; this legacy key is ignored by them.
    certmanager.k8s.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/ssl-passthrough: "false"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/load-balance: ip_hash # ip_hash provides stickiness to the same XtremeCloud SSO pod
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/affinity: cookie
    nginx.ingress.kubernetes.io/session-cookie-expires: "172800"
    nginx.ingress.kubernetes.io/session-cookie-max-age: "172800"
    nginx.ingress.kubernetes.io/session-cookie-name: nginx-route
    nginx.ingress.kubernetes.io/service-upstream: "true"
    nginx.ingress.kubernetes.io/upstream-vhost: sso.iam.svc.cluster.local
    nginx.ingress.kubernetes.io/server-snippet: |
      proxy_ssl_name sso-dev.eupraxialabs.com;
      proxy_ssl_server_name on;
  name: nginx-ingress
spec:
  rules:
  - host: sso-dev.eupraxialabs.com
    http:
      paths:
      - backend:
          serviceName: sso
          servicePort: 8080
        path: /
  tls:
  - hosts:
    - sso-dev.eupraxialabs.com
    secretName: sso-dev-eupraxialabs-com
---
# Deployment: nginx-ingress-controller
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: iam
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ingress-nginx
  template:
    metadata:
      labels:
        app: ingress-nginx
      annotations:
        prometheus.io/port: '10254'
        prometheus.io/scrape: 'true'
        # Do not redirect inbound traffic to Envoy.
        traffic.sidecar.istio.io/includeInboundPorts: ""
        traffic.sidecar.istio.io/excludeInboundPorts: "80,443"
        # Exclude outbound traffic to the Kubernetes API server from redirection.
        traffic.sidecar.istio.io/excludeOutboundIPRanges: __KUBE_API_SERVER_IP__
        sidecar.istio.io/inject: 'true'
    spec:
      serviceAccountName: nginx-ingress-serviceaccount
      containers:
      - name: nginx
        image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.12.0
        securityContext:
          runAsUser: 0
        args:
        - /nginx-ingress-controller
        - --default-backend-service=$(POD_NAMESPACE)/nginx-default-http-backend
        - --configmap=$(POD_NAMESPACE)/nginx-configuration
        - --tcp-services-configmap=$(POD_NAMESPACE)/nginx-tcp-services
        - --udp-services-configmap=$(POD_NAMESPACE)/nginx-udp-services
        - --annotations-prefix=nginx.ingress.kubernetes.io
        - --v=10
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        ports:
        - name: http
          containerPort: 80
        - name: https
          containerPort: 443
        livenessProbe:
          failureThreshold: 8
          initialDelaySeconds: 15
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
        readinessProbe:
          failureThreshold: 8
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
---
# Service: ingress-nginx
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: iam
  labels:
    app: ingress-nginx
spec:
  type: LoadBalancer
  selector:
    app: ingress-nginx
  ports:
  - name: http
    port: 80
    targetPort: http
  - name: https
    port: 443
    targetPort: https
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configuration
  namespace: iam
  labels:
    app: ingress-nginx
data:
  ssl-redirect: "true"
  use-forwarded-headers: "true"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-tcp-services
  namespace: iam
  labels:
    app: ingress-nginx
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-udp-services
  namespace: iam
  labels:
    app: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: iam
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  namespace: iam
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "extensions"
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - "extensions"
  resources:
  - ingresses/status
  verbs:
  - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: iam
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  resourceNames:
  # Defaults to "<election-id>-<ingress-class>"
  # Here: "<ingress-controller-leader>-<nginx>"
  # This has to be adapted if you change either parameter
  # when launching the nginx-ingress-controller.
  - "ingress-controller-leader-nginx"
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - endpoints
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: iam
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  namespace: iam
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount
  namespace: iam
---
# Deployment: nginx-default-http-backend
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-default-http-backend
  namespace: iam
  labels:
    app: nginx-default-http-backend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-default-http-backend
  template:
    metadata:
      labels:
        app: nginx-default-http-backend
      # rewrite kubelet's probe request to pilot agent to prevent health check failure under mtls
      annotations:
        sidecar.istio.io/rewriteAppHTTPProbers: "true"
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        image: gcr.io/google_containers/defaultbackend:1.4
        securityContext:
          runAsUser: 0
        ports:
        - name: http
          containerPort: 8080
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
---
# Service: nginx-default-http-backend
apiVersion: v1
kind: Service
metadata:
  name: nginx-default-http-backend
  namespace: iam
  labels:
    app: nginx-default-http-backend
spec:
  ports:
  - name: http
    port: 80
    targetPort: http
  selector:
    app: nginx-default-http-backend
---

We’re not going to cover the details of the Cert Manager (ACME) installation, but we’ll show you some deployment details:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ helm ls
NAME           REVISION   UPDATED                    STATUS     CHART                  APP VERSION   NAMESPACE
cert-manager   1          Mon Aug 10 10:19:40 2020   DEPLOYED   cert-manager-v0.16.0   v0.16.0       cert-manager

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl get certs
NAME                       READY   SECRET                     AGE
sso-dev.eupraxialabs-com   True    sso-dev-eupraxialabs-com   30h

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl get clusterissuer
NAME               READY   AGE
letsencrypt-prod   True    30h

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl get pods -n cert-manager
NAME                                       READY   STATUS    RESTARTS   AGE
cert-manager-c456f8b56-pf7f6               1/1     Running   5          30h
cert-manager-cainjector-6b4f5b9c99-jlrps   1/1     Running   10         30h
cert-manager-webhook-5cfd5478b-srt2v       1/1     Running   7          30h

With Let's Encrypt installed (details not covered here), let's exec into the istio-proxy sidecar of the NGINX Controller pod, connect to NGINX, and verify the certificate:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl exec -it $(kubectl get pod -l app=ingress-nginx -o jsonpath={.items..metadata.name}) -c istio-proxy -- curl -v --resolve sso-dev.eupraxialabs.com:443:127.0.0.1 https://sso-dev.eupraxialabs.com

* Added sso-dev.eupraxialabs.com:443:127.0.0.1 to DNS cache
* Rebuilt URL to: https://sso-dev.eupraxialabs.com/
* Hostname sso-dev.eupraxialabs.com was found in DNS cache
* Trying 127.0.0.1...
* TCP_NODELAY set
* Connected to sso-dev.eupraxialabs.com (127.0.0.1) port 443 (#0)
* ALPN, offering h2
* ALPN, offering http/1.1
* successfully set certificate verify locations:
* CAfile: /etc/ssl/certs/ca-certificates.crt
  CApath: /etc/ssl/certs
* TLSv1.3 (OUT), TLS handshake, Client hello (1):
* TLSv1.3 (IN), TLS handshake, Server hello (2):
* TLSv1.2 (IN), TLS handshake, Certificate (11):
* TLSv1.2 (IN), TLS handshake, Server key exchange (12):
* TLSv1.2 (IN), TLS handshake, Server finished (14):
* TLSv1.2 (OUT), TLS handshake, Client key exchange (16):
* TLSv1.2 (OUT), TLS change cipher, Client hello (1):
* TLSv1.2 (OUT), TLS handshake, Finished (20):
* TLSv1.2 (IN), TLS handshake, Finished (20):
* SSL connection using TLSv1.2 / ECDHE-RSA-AES256-GCM-SHA384
* ALPN, server accepted to use h2
* Server certificate:
* subject: CN=sso-dev.eupraxialabs.com
* start date: Aug 10 14:25:20 2020 GMT
* expire date: Nov 8 14:25:20 2020 GMT
* subjectAltName: host "sso-dev.eupraxialabs.com" matched cert's "sso-dev.eupraxialabs.com"
* issuer: C=US; O=Let's Encrypt; CN=Let's Encrypt Authority X3
* SSL certificate verify ok.
* Using HTTP2, server supports multi-use
* Connection state changed (HTTP/2 confirmed)
* Copying HTTP/2 data in stream buffer to connection buffer after upgrade: len=0
* Using Stream ID: 1 (easy handle 0x5555e9bce580)
> GET / HTTP/2
> Host: sso-dev.eupraxialabs.com
> User-Agent: curl/7.58.0
> Accept: */*
>
* Connection state changed (MAX_CONCURRENT_STREAMS updated)!
< HTTP/2 200
< server: nginx/1.13.9
< date: Tue, 11 Aug 2020 20:54:49 GMT
< content-type: text/html
< content-length: 1087
< vary: Accept-Encoding
< last-modified: Fri, 01 May 2020 15:08:38 GMT
< accept-ranges: bytes
< x-envoy-upstream-service-time: 2
< strict-transport-security: max-age=15724800; includeSubDomains;
<
<!--
  ~ Copyright 2016 Red Hat, Inc. and/or its affiliates
  ~ and other contributors as indicated by the @author tags.
  ~
  ~ Licensed under the Apache License, Version 2.0 (the "License");
  ~ you may not use this file except in compliance with the License.
  ~ You may obtain a copy of the License at
  ~
  ~ http://www.apache.org/licenses/LICENSE-2.0
  ~
  ~ Unless required by applicable law or agreed to in writing, software
  ~ distributed under the License is distributed on an "AS IS" BASIS,
  ~ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  ~ See the License for the specific language governing permissions and
  ~ limitations under the License.
  -->
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN">
<html>
<head>
    <meta http-equiv="refresh" content="0; url=/auth/" />
    <meta name="robots" content="noindex, nofollow">
    <script type="text/javascript">
        window.location.href = "/auth/"
    </script>
</head>
<body>
    If you are not redirected automatically, follow this <a href='/auth'>link</a>.
</body>
</html>
* Connection #0 to host sso-dev.eupraxialabs.com left intact

As expected, a valid Let's Encrypt certificate is applied to the NGINX Kubernetes Ingress Controller (KIC), and the request reached the SSO pod.
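Rather than reading the verbose transcript by eye, the two lines that matter (the certificate subject and curl's verification result) can be checked mechanically. The function below is a hypothetical helper, a sketch that assumes the `curl -v` wording shown above (`subject: ...CN=...` and `SSL certificate verify ok.`); other curl versions may phrase these lines differently. It would also flag NGINX falling back to its default fake certificate, whose subject would not be the SSO host name.

```shell
# Read `curl -v` output on stdin (hypothetical helper) and check that the
# server certificate's subject CN equals HOST and that curl verified it.
check_curl_cert() {
  local host="$1"
  awk -v host="$host" '
    /^[*] +subject:/ {
      cn = $0
      if (!sub(/.*CN=/, "", cn)) cn = ""
      sub(/[;,].*$/, "", cn)       # drop anything after the CN value
      sub(/[ \t\r]+$/, "", cn)     # drop trailing whitespace
    }
    /SSL certificate verify ok[.]/ { verified = 1 }
    END {
      if (cn != host) { print "unexpected certificate CN: " cn > "/dev/stderr"; exit 1 }
      if (!verified)  { print "curl did not verify the certificate" > "/dev/stderr"; exit 1 }
    }
  '
}
```

curl writes its verbose output to stderr, so redirect it into the pipe, for example: `curl -sv --resolve sso-dev.eupraxialabs.com:443:127.0.0.1 https://sso-dev.eupraxialabs.com -o /dev/null 2>&1 | check_curl_cert sso-dev.eupraxialabs.com`.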

A quick check inside the NGINX Controller container shows the valid certificates:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl exec -it nginx-ingress-controller-6c6fdf5598-jz89l -c nginx -- bash
root@nginx-ingress-controller-6c6fdf5598-jz89l:/# ls -lsa
.dockerenv  boot/  etc/  lib/  mnt/  proc/  sbin/  tmp/
Dockerfile  build.sh  home/  lib64/  nginx-ingress-controller  root/  srv/  usr/
bin/  dev/  ingress-controller/  media/  opt/  run/  sys/  var/
root@nginx-ingress-controller-6c6fdf5598-jz89l:/# ls -lsa ingress-controller/
clean-nginx-conf.sh  ssl/
root@nginx-ingress-controller-6c6fdf5598-jz89l:/# ls -lsa ingress-controller/ssl/
default-fake-certificate.pem  iam-sso-dev-eupraxialabs-com-full-chain.pem  iam-sso-dev-eupraxialabs-com.pem
root@nginx-ingress-controller-6c6fdf5598-jz89l:/# ls -lsa ingress-controller/ssl/
total 28
4 drw-r-xr-x 2 root root 4096 Aug 5 16:54 .
4 drwxrwxr-x 1 root root 4096 Aug 5 16:53 ..
4 -rw------- 1 root root 2933 Aug 5 16:53 default-fake-certificate.pem
8 -rw-r--r-- 1 root root 4782 Aug 5 16:54 iam-sso-dev-eupraxialabs-com-full-chain.pem
8 -rw------- 1 root root 5258 Aug 5 16:53 iam-sso-dev-eupraxialabs-com.pem

Note the presence of default-fake-certificate.pem. The NGINX Ingress Controller serves this fake certificate for any Ingress resource that does not provide its own. An Istio Ingress Gateway has no such fallback certificate; the certificate must be defined in every Gateway resource.

Let’s look at the pods running in the istio-system namespace:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl get po -n istio-system
NAME                                    READY   STATUS    RESTARTS   AGE
grafana-b54bb57b9-z7dpt                 1/1     Running   3          2d4h
istio-egressgateway-7486cf8c97-pnb54    1/1     Running   3          2d4h
istio-ingressgateway-6bcb9d7bbf-qkkgt   0/1     Running   4          2d4h
istio-tracing-9dd6c4f7c-bbjs2           1/1     Running   6          2d4h
istiod-788f76c8fc-hhk4b                 1/1     Running   4          2d4h
kiali-d45468dc4-2s2tr                   1/1     Running   3          2d4h
prometheus-6477cfb669-jqjvp             2/2     Running   11         2d4h

Although the istio-ingressgateway pod is not ready (0/1), this does not affect the NGINX Ingress Controller's functions. Remember that our NGINX KIC deployment has annotations that keep inbound traffic on the NGINX ports from being redirected through the Istio Envoy proxy:

traffic.sidecar.istio.io/includeInboundPorts: ""

traffic.sidecar.istio.io/excludeInboundPorts: "80,443"
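Note that the STATUS column alone is misleading here: istio-ingressgateway reports `Running` while only `0/1` of its containers are ready. A small filter on the READY column makes such pods stand out. This is a sketch with a hypothetical `pods_not_ready` helper, assuming the default `kubectl get pods` column order (NAME, READY, STATUS, ...).

```shell
# Print pods whose READY column (ready/total containers) is incomplete,
# reading `kubectl get pods` output from stdin (hypothetical helper).
pods_not_ready() {
  awk 'NR > 1 && NF > 0 {
         split($2, r, "/")
         if (r[1] != r[2]) print $1, $2, $3
       }'
}
```

For example: `kubectl get pods -n istio-system | pods_not_ready`.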

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl get services
NAME                         TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)                      AGE
ingress-nginx                LoadBalancer   10.97.201.174   10.97.201.174   80:31767/TCP,443:32396/TCP   30h
nginx-default-http-backend   ClusterIP      10.96.122.98    <none>          80/TCP                       30h
postgres                     ClusterIP      10.110.39.39    <none>          5432/TCP                     2d4h
sso                          ClusterIP      10.109.50.231   <none>          8080/TCP                     30h

Installation Summary

Let’s bring up K9s and look at the installation results.

Additional Information

Let’s get a status on the Istio Service Mesh:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ istioctl proxy-status
NAME                                                 CDS      LDS      EDS      RDS      PILOT                     VERSION
httpbin-66cdbdb6c5-s6zl7.default                     SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
istio-ingressgateway-6f9df9b8-rc46f.istio-system     SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
nginx-default-http-backend-7bc59fd8f-qwqvd.ingress   SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
nginx-default-http-backend-7bc59fd8f-skln7.iam       SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
nginx-ingress-controller-6c6fdf5598-d72hw.ingress    SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
nginx-ingress-controller-6c6fdf5598-gh4wc.default    SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
nginx-ingress-controller-6c6fdf5598-jz89l.iam        SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
postgres-6cbbc9d488-7wssm.iam                        SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
prometheus-6477cfb669-6h2xb.istio-system             SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5
xtremecloud-sso-minikube-0.iam                       SYNCED   SYNCED   SYNCED   SYNCED   istiod-59b9dd8b9c-7tb97   1.6.5

If a proxy is missing from this list, it is not currently connected to an Istiod instance and will not receive any configuration.

- SYNCED means that Envoy has acknowledged the last configuration Istiod sent to it.

- NOT SENT means that Istiod hasn't sent anything to Envoy. This is usually because Istiod has nothing to send.

- STALE means that Istiod has sent an update to Envoy but has not received an acknowledgement. This usually indicates a networking issue between Envoy and Istiod or a bug in Istio itself.
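With many proxies, a scripted check is less error-prone than scanning the table. The hypothetical `proxies_out_of_sync` helper below prints every proxy whose CDS, LDS, EDS, or RDS column is not `SYNCED` and exits non-zero if it finds one. It is a sketch that assumes the Istio 1.6 column order shown above; because `NOT SENT` is two words, it reports only the proxy name rather than trying to parse the column values.

```shell
# Print proxies from `istioctl proxy-status` output (stdin) that are not
# SYNCED in any of the CDS/LDS/EDS/RDS columns (hypothetical helper).
proxies_out_of_sync() {
  awk '
    NR == 1 || NF == 0 { next }          # skip the header row and blank lines
    {
      for (i = 2; i <= 5; i++)
        if ($i != "SYNCED") { print $1; bad = 1; next }
    }
    END { if (bad) exit 1 }
  '
}
```

For example: `istioctl proxy-status | proxies_out_of_sync || echo "some proxies are not in sync"`.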

So, we’re healthy.

Advanced Debugging Techniques

If Cert-Manager (ACME) has trouble issuing a certificate through a DNS-01 challenge, check the following.

From a dnsutils pod, let’s look up a domain’s Start of Authority (SOA) record:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl exec -i -t -n default dnsutils -- nslookup -type=soa eupraxialabs.com
Server:     10.96.0.10
Address:    10.96.0.10#53

cluster.local
    origin = ns.dns.cluster.local
    mail addr = hostmaster.cluster.local
    serial = 1597589609
    refresh = 7200
    retry = 1800
    expire = 86400
    minimum = 30

That is not the expected result. Because Cloudflare provides the DNS services for this domain, cert-manager expects an SOA record served by Cloudflare (gabe.ns.cloudflare.com).

Let’s see if cert-manager added the TXT record to the DNS provider:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ dig _acme-challenge.eupraxialabs.com TXT

; <<>> DiG 9.10.6 <<>> _acme-challenge.eupraxialabs.com TXT
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 34862
;; flags: qr rd ra; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;_acme-challenge.eupraxialabs.com. IN TXT

;; AUTHORITY SECTION:
eupraxialabs.com. 900 IN SOA gabe.ns.cloudflare.com. dns.cloudflare.com. 2034925929 10000 2400 604800 3600

;; Query time: 44 msec
;; SERVER: 192.168.1.1#53(192.168.1.1)
;; WHEN: Sun Aug 16 13:09:54 CDT 2020
;; MSG SIZE rcvd: 120

The AUTHORITY section shows the expected Cloudflare SOA record. Another easy way to check, outside of Kubernetes, is an nslookup query of type SOA on the domain of interest.

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ nslookup -type=soa eupraxialabs.com
Server:     192.168.1.1
Address:    192.168.1.1#53

Non-authoritative answer:
eupraxialabs.com
    origin = gabe.ns.cloudflare.com
    mail addr = dns.cloudflare.com
    serial = 2034925929
    refresh = 10000
    retry = 2400
    expire = 604800
    minimum = 3600

Authoritative answers can be found from:
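The symptom above is that the SOA origin seen from inside the cluster (`ns.dns.cluster.local`) differs from the one seen from the workstation (`gabe.ns.cloudflare.com`). The sketch below extracts that origin so the comparison can be scripted; `soa_origin` and `soa_origin_is` are hypothetical helpers that assume the `origin = ...` line format printed by `nslookup` above.

```shell
# Print the primary name server ("origin") from `nslookup -type=soa`
# output on stdin (hypothetical helper).
soa_origin() {
  awk -F' = ' '$1 ~ /^[ \t]*origin$/ {
    v = $2
    sub(/[ \t\r]+$/, "", v)   # drop trailing whitespace
    print v
    exit
  }'
}

# Succeed if the SOA origin read from stdin equals the expected name server.
soa_origin_is() {
  [ "$(soa_origin)" = "$1" ]
}
```

For example: `kubectl exec -n default dnsutils -- nslookup -type=soa eupraxialabs.com | soa_origin_is gabe.ns.cloudflare.com || echo "cluster DNS does not return the Cloudflare SOA"`.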

We're going to edit the CoreDNS ConfigMap (kubectl -n kube-system edit configmap coredns) and set up an external resolver that forwards to a Google DNS server (8.8.8.8):

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
           lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        # changed from /etc/resolv.conf
        forward . 8.8.8.8
        cache 30
        loop
        reload
        loadbalance
    }
kind: ConfigMap
metadata:
  creationTimestamp: "2020-08-14T19:46:54Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data: {}
    manager: kubeadm
    operation: Update
    time: "2020-08-14T20:34:23Z"
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        f:Corefile: {}
    manager: kubectl
    operation: Update
    time: "2020-08-16T18:44:33Z"
  name: coredns
  namespace: kube-system
  resourceVersion: "49014"
  selfLink: /api/v1/namespaces/kube-system/configmaps/coredns
  uid: e4fae5b4-baa6-47a0-aa71-68cdb7dd5e33
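`kubectl edit` is interactive. For a repeatable change, the `forward` line can be rewritten with `sed` instead. The hypothetical `set_corefile_forward` helper below is a sketch: it assumes a plain `forward . <upstream>` line like the one above and deliberately leaves any line containing `{` untouched, since replacing the rest of a `forward . x {` line would drop the opening brace of its options block.

```shell
# Rewrite the "forward ." line of a Corefile read from stdin so that it points
# at the given upstream resolver(s) (hypothetical helper; stdout gets the result).
set_corefile_forward() {
  if [ "$#" -eq 0 ]; then
    echo "usage: set_corefile_forward UPSTREAM..." >&2
    return 2
  fi
  # Lines containing "{" are skipped so an options block is never broken.
  sed -E '/[{]/!s|^([[:space:]]*forward[[:space:]]+\.)[[:space:]].*$|\1 '"$*"'|'
}
```

To preview the change against the live Corefile: `kubectl -n kube-system get configmap coredns -o jsonpath='{.data.Corefile}' | set_corefile_forward 8.8.8.8`. Writing the result back to the ConfigMap is left to your own tooling (or to `kubectl edit`, as above).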

Delete the CoreDNS pod and try it again:

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ kubectl exec -i -t -n default dnsutils -- nslookup -type=soa eupraxialabs.com
Server:     10.96.0.10
Address:    10.96.0.10#53

cluster.local
    origin = ns.dns.cluster.local
    mail addr = hostmaster.cluster.local
    serial = 1597611039
    refresh = 7200
    retry = 1800
    expire = 86400
    minimum = 30

The answer is unchanged, so this check is inconclusive. However, cert-manager was able to retrieve the SOA, and the certificate was issued as expected. Again, this is running on Minikube, and the behavior needs further investigation.

Logging Levels for an Istio Proxy

Let’s take a look at logging levels within an istio-proxy.

As we will see, it is very easy to change the logging level of an istio-proxy:

$ kubectl exec -it xtremecloud-sso-minikube-0 -c istio-proxy -- sh -c 'curl -k -X POST localhost:15000/logging?level=info'

active loggers:
  admin: info
  aws: info
  assert: info
  backtrace: info
  cache_filter: info
  client: info
  config: info
  connection: info
  conn_handler: info
  decompression: info
  dubbo: info
  file: info
  filter: info
  forward_proxy: info
  grpc: info
  hc: info
  health_checker: info
  http: info
  http2: info
  hystrix: info
  init: info
  io: info
  jwt: info
  kafka: info
  lua: info
  main: info
  misc: info
  mongo: info
  quic: info
  quic_stream: info
  pool: info
  rbac: info
  redis: info
  router: info
  runtime: info
  stats: info
  secret: info
  tap: info
  testing: info
  thrift: info
  tracing: info
  upstream: info
  udp: info
  wasm: info

We’re going to put our sidecar that is in the pod with XtremeCloud SSO into ‘debug’ mode:

$ kubectl exec -it xtremecloud-sso-minikube-0 -c istio-proxy -- sh -c 'curl -k -X POST localhost:15000/logging?level=debug'

active loggers:
  admin: debug
  aws: debug
  assert: debug
  backtrace: debug
  cache_filter: debug
  client: debug
  config: debug
  connection: debug
  conn_handler: debug
  decompression: debug
  dubbo: debug
  file: debug
  filter: debug
  forward_proxy: debug
  grpc: debug
  hc: debug
  health_checker: debug
  http: debug
  http2: debug
  hystrix: debug
  init: debug
  io: debug
  jwt: debug
  kafka: debug
  lua: debug
  main: debug
  misc: debug
  mongo: debug
  quic: debug
  quic_stream: debug
  pool: debug
  rbac: debug
  redis: debug
  router: debug
  runtime: debug
  stats: debug
  secret: debug
  tap: debug
  testing: debug
  thrift: debug
  tracing: debug
  upstream: debug
  udp: debug
  wasm: debug
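The `/logging` response can also serve as a check that the level change took effect for every logger. The sketch below, with a hypothetical `loggers_at_level` helper, parses the `active loggers:` list shown above and prints any logger that is not at the requested level. Envoy's admin interface can also change a single logger (for example `POST /logging?router=debug`) if the full debug output is too noisy; confirm this against the Envoy admin documentation for the version bundled with your Istio release.

```shell
# Succeed if every entry under "active loggers:" in an Envoy admin /logging
# response (stdin) reports LEVEL; print those that do not (hypothetical helper).
loggers_at_level() {
  local level="$1"
  awk -v want="$level" '
    /^active loggers:/ { in_list = 1; next }
    in_list && NF == 2 && $1 ~ /:$/ {
      seen++
      if ($2 != want) { print $1, $2; bad = 1 }
    }
    END { if (!seen || bad) exit 1 }
  '
}
```

For example: `kubectl exec xtremecloud-sso-minikube-0 -c istio-proxy -- curl -s -X POST 'localhost:15000/logging?level=debug' | loggers_at_level debug`.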

As we can see, ‘debug’ entries are now appearing in the XtremeCloud SSO sidecar logs:

$ kubectl logs -f xtremecloud-sso-minikube-0 -c istio-proxy
2020-08-02T15:16:55.629304Z debug envoy http [external/envoy/source/common/http/conn_manager_impl.cc:1337] [C14064][S17228183679396321216] request end stream
2020-08-02T15:16:55.629333Z debug envoy router [external/envoy/source/common/router/router.cc:477] [C14064][S17228183679396321216] cluster 'agent' match for URL '/healthz/ready'
2020-08-02T15:16:55.629362Z debug envoy router [external/envoy/source/common/router/router.cc:634] [C14064][S17228183679396321216] router decoding headers:
  ':authority', '172.17.0.12:15021'
  ':path', '/healthz/ready'
  ':method', 'GET'
  ':scheme', 'http'
  'user-agent', 'kube-probe/1.18'
  'accept-encoding', 'gzip'
  'x-forwarded-proto', 'http'
  'x-request-id', '5c46e1fd-19fd-48f0-9814-64576b5f1467'
  'x-envoy-expected-rq-timeout-ms', '15000'
2020-08-02T15:16:55.629399Z debug envoy pool [external/envoy/source/common/http/conn_pool_base.cc:118] [C7] using existing connection
2020-08-02T15:16:55.629409Z debug envoy pool [external/envoy/source/common/http/conn_pool_base.cc:68] [C7] creating stream
2020-08-02T15:16:55.629422Z debug envoy router [external/envoy/source/common/router/upstream_request.cc:317] [C14064][S17228183679396321216] pool ready
2020-08-02T15:16:55.630491Z debug envoy http [external/envoy/source/common/http/conn_manager_impl.cc:268] [C10704] new stream
2020-08-02T15:16:55.630976Z debug envoy http [external/envoy/source/common/http/conn_manager_impl.cc:782] [C10704][S1098415029100968055] request headers complete (end_stream=true):
  ':authority', '127.0.0.1:15000'
  ':path', '/stats?usedonly&filter=^(server.state|listener_manager.workers_started)'
  ':method', 'GET'
  'user-agent', 'Go-http-client/1.1'
  'accept-encoding', 'gzip'
2020-08-02T15:16:55.630992Z debug envoy http [external/envoy/source/common/http/conn_manager_impl.cc:1337] [C10704][S1098415029100968055] request end stream
2020-08-02T15:16:55.631007Z debug envoy admin [external/envoy/source/server/http/admin_filter.cc:66] [C10704][S1098415029100968055] request complete: path: /stats?usedonly&filter=^(server.state|listener_manager.workers_started)
2020-08-02T15:16:55.637364Z debug envoy http [external/envoy/source/common/http/conn_manager_impl.cc:1710] [C10704][S1098415029100968055] encoding headers via codec (end_stream=false):
  ':status', '200'
  'content-type', 'text/plain; charset=UTF-8'
  'cache-control', 'no-cache, max-age=0'
  'x-content-type-options', 'nosniff'
  'date', 'Sun, 02 Aug 2020 15:16:55 GMT'
  'server', 'envoy'
2020-08-02T15:16:55.637738Z debug envoy client [external/envoy/source/common/http/codec_client.cc:104] [C7] response complete
2020-08-02T15:16:55.637774Z debug envoy router [external/envoy/source/common/router/router.cc:1149] [C14064][S17228183679396321216] upstream headers complete: end_stream=true
2020-08-02T15:16:55.637830Z debug envoy http [external/envoy/source/common/http/conn_manager_impl.cc:1649] [C14064][S17228183679396321216] closing connection due to connection close header
2020-08-02T15:16:55.637847Z debug envoy http [external/envoy/source/common/http/conn_manager_impl.cc:1710] [C14064][S17228183679396321216] encoding headers via codec (end_stream=true):
  ':status', '200'
  'date', 'Sun, 02 Aug 2020 15:16:55 GMT'
  'content-length', '0'
  'x-envoy-upstream-service-time', '8'
  'server', 'envoy'
  'connection', 'close'
2020-08-02T15:16:55.637872Z debug envoy connection [external/envoy/source/common/network/connection_impl.cc:109] [C14064] closing data_to_write=143 type=2
2020-08-02T15:16:55.637882Z debug envoy connection [external/envoy/source/common/network/connection_impl_base.cc:30] [C14064] setting delayed close timer with timeout 1000 ms
2020-08-02T15:16:55.637899Z debug envoy pool [external/envoy/source/common/http/http1/conn_pool.cc:48] [C7] response complete
2020-08-02T15:16:55.637910Z debug envoy pool [external/envoy/source/common/http/conn_pool_base.cc:93] [C7] destroying stream: 0 remaining
2020-08-02T15:16:55.637995Z debug envoy connection [external/envoy/source/common/network/connection_impl.cc:622] [C14064] write flush complete
2020-08-02T15:16:55.638888Z debug envoy connection [external/envoy/source/common/network/connection_impl.cc:514] [C14064] remote early close
2020-08-02T15:16:55.638901Z debug envoy connection [external/envoy/source/common/network/connection_impl.cc:200] [C14064] closing socket: 0
2020-08-02T15:16:55.638954Z debug envoy conn_handler [external/envoy/source/server/connection_handler_impl.cc:111] [C14064] adding to cleanup list
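Debug output is voluminous. To follow a single Envoy logger, such as `router`, the stream can be filtered on the logger name in the fourth field. The hypothetical `envoy_logs_for` helper below is a sketch that assumes the line layout shown above (`<timestamp> <level> envoy <logger> [<source>] ...`) and keeps the indented header lines that belong to each selected entry.

```shell
# Keep only the Envoy log entries emitted by one logger (e.g. "router"),
# including their continuation lines, from log text on stdin (hypothetical helper).
envoy_logs_for() {
  local logger="$1"
  awk -v want="$logger" '
    $3 == "envoy" { keep = ($4 == want) }   # a new log entry starts on this line
    keep                                    # print entry and continuation lines
  '
}
```

For example: `kubectl logs -f xtremecloud-sso-minikube-0 -c istio-proxy | envoy_logs_for router`.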

Two (2) Entry Points into the Istio Service Mesh

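The ingress-nginx service is our NGINX ingress into the service mesh, with XtremeCloud SSO behind the proxy. The istio-ingressgateway service is our Envoy-based ingress into the service mesh. With minikube tunnel running, both LoadBalancer services are exposed: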

Davids-MacBook-Pro:xtremecloud-sso-minikube davidjbrewer$ minikube -p xtremecloud-istio tunnel

Status:
    machine: xtremecloud-istio
    pid: 18649
    route: 10.96.0.0/12 -> 192.168.99.100
    minikube: Running
    services: [ingress-nginx, istio-ingressgateway]
    errors:
        minikube: no errors
        router: no errors
        loadbalancer emulator: no errors

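Once the tunnel is up, both LoadBalancer services should have an external IP. The hypothetical `lb_external_ips` helper below is a sketch that reads `kubectl get services` output (default columns, single namespace) and prints each LoadBalancer service with its EXTERNAL-IP, failing if any is still `<pending>`.

```shell
# Print LoadBalancer services and their EXTERNAL-IP from `kubectl get services`
# output on stdin; fail if any is still <pending> (hypothetical helper).
lb_external_ips() {
  awk 'NR > 1 && $2 == "LoadBalancer" {
         print $1, $4
         if ($4 == "<pending>") pending = 1
       }
       END { if (pending) exit 1 }'
}
```

For example: `kubectl get services -n iam | lb_external_ips` and `kubectl get services -n istio-system | lb_external_ips`.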