Managing a Split-Brain in the XtremeCloud Data Grid-web

Managing Consistency, Availability, and a Network Partition (CAP)

Background

Handling network partitions

JGroups does not have a special event for network partitions, so for XtremeCloud Data Grid-web a network partition is indistinguishable from one or more nodes crashing.

Partition handling disabled

With partition handling disabled, a node that leaves the JGroups view unexpectedly is assumed to have crashed, and to rejoin the cluster only after a restart, with a different JGroups address and without holding any data.

If a split does occur, the two partitions will be able to work separately. Writes on nodes that have not yet detected the split will block waiting for a response from every node, and they will be retried on the originator’s partition when the new cache topology is installed. Writes initiated before the split may be applied on some of the nodes in other partitions, but there is no guarantee.

When the partitions merge, XtremeCloud Data Grid-web does not attempt to merge the different values that each partition might have. The largest partition (i.e. the one with the most nodes) is assumed to be the correct one, and its topology becomes the merge topology. Data on nodes that are not in the merge consistent hash (CH) is wiped, and those nodes receive the latest data from the nodes in the merge CH.
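To make the merge rule concrete, here is a minimal plain-Java sketch that models partitions as sets of node names. The class and method names are hypothetical and are not XtremeCloud Data Grid-web or Infinispan APIs. The tie-break (the first partition wins when two have the same size) is an assumption, because the text above does not say how ties are handled.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/**
 * Hypothetical model (not Infinispan code) of the merge rule used when
 * partition handling is disabled: the partition with the most nodes supplies
 * the merge topology, and every node outside it is wiped.
 */
final class LargestPartitionMerge {

    /** Returns the partition with the most members; assumption: the first one wins a tie. */
    static Set<String> mergeTopology(List<Set<String>> partitions) {
        if (partitions.isEmpty()) {
            throw new IllegalArgumentException("at least one partition is required");
        }
        Set<String> largest = partitions.get(0);
        for (Set<String> p : partitions) {
            if (p.size() > largest.size()) {
                largest = p;
            }
        }
        return largest;
    }

    /** Returns the nodes whose data is wiped and then refilled from the merge topology. */
    static Set<String> nodesToWipe(List<Set<String>> partitions) {
        Set<String> keep = mergeTopology(partitions);
        Set<String> wiped = new HashSet<>();
        for (Set<String> p : partitions) {
            for (String node : p) {
                if (!keep.contains(node)) {
                    wiped.add(node);
                }
            }
        }
        return wiped;
    }
}
```

Note that this model does not capture the Full GC caveat described next, where a suspected node can skip straight to the merge view and keep its stale entries.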

If a node is suspected because of a Full GC, it might go from the initial JGroups view straight to the merge view. If that happens, its topology will be the largest one, and it will neither be wiped nor receive new data. Instead, it will keep the (possibly stale) entries it had before the Full GC.

Partition handling enabled

With partition handling enabled, a node that leaves the JGroups view unexpectedly is assumed to merge back without a restart.

The cache will still install a topology without the node, and when it joins back it will receive the latest entries from the other members (wiping its own entries in the process).

After the new topology is installed on all the nodes, it becomes the stable topology. If at least half of the nodes in the stable topology leave in quick succession (i.e. before the stable topology is updated), the cache becomes Degraded and none of the keys are readable or writeable. The assumption is that the leaving nodes could be forming a partition by themselves and updating the entries, so the values in the smaller (i.e. minority) partition could be stale.
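The Degraded rule above is a simple majority check. The following plain-Java sketch expresses "at least half of the stable members left" with integer arithmetic. The names are hypothetical and the code is not taken from the Infinispan source.

```java
/**
 * Hypothetical sketch (not Infinispan's implementation): if at least half of
 * the stable topology's members leave before the stable topology is updated,
 * the remaining partition enters Degraded mode.
 */
final class DegradedModeCheck {

    /**
     * @param stableMembers number of nodes in the last stable topology
     * @param lostMembers   number of those nodes that have since left
     * @return true if the remaining partition should become Degraded
     */
    static boolean becomesDegraded(int stableMembers, int lostMembers) {
        if (stableMembers <= 0 || lostMembers < 0 || lostMembers > stableMembers) {
            throw new IllegalArgumentException("invalid member counts");
        }
        // "lost >= stable / 2" without floating point: 2 * lost >= stable
        return 2 * lostMembers >= stableMembers;
    }
}
```

Each side of a split evaluates the rule on its own. An even 2/2 split of a four-node stable topology therefore makes both halves Degraded (`becomesDegraded(4, 2)` is true). In a 3/2 split of five nodes, only the two-node side degrades.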

If a node leaves gracefully, the cache may become Unavailable instead of Degraded, to signal that it cannot merge back and become Available again (tracked in ISPN-5060).

During a merge, there are five possible scenarios:

1. Both (or rather all) partitions are Degraded, and together they have enough nodes to become Available. The cache becomes Available with the current nodes, and the stable topology is also updated.

2. Both partitions are Degraded, and together they don’t have enough nodes to become Available. The cache stays Degraded, and the topology with the highest id is installed everywhere.

3. One partition is Available, and the other is Degraded. The entries on the Degraded partition’s nodes are wiped, and those nodes receive the latest state from the Available partition’s nodes. The stable topology is updated afterwards.

   A special case is when the Available partition has not yet updated its topology to remove the nodes in the Degraded partition (and possibly become Degraded itself). Since all the nodes are present in the Available partition’s topology, none of them are wiped. And if some of the nodes in the Available partition’s consistent hash are not actually accessible after the merge, the merged partition might stay Degraded.

4. Both partitions are Available. This means one partition didn’t detect the split. The topology with the highest topology id is used as the merge topology. The nodes not in the merge topology are wiped, and they receive the latest state from the Available partition’s nodes.

5. One partition is Unavailable. The cache becomes Unavailable everywhere.
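The merge scenarios can be condensed into a small decision function. This is a hypothetical model for illustration only. It ignores the special case in which the Available partition never removed the other partition's nodes from its topology, and it does not model which nodes are wiped.

```java
import java.util.List;

/** Hypothetical model of the merge scenarios above (not Infinispan code). */
final class MergeOutcome {

    enum Mode { AVAILABLE, DEGRADED, UNAVAILABLE }

    /**
     * @param partitionModes      availability mode of each merging partition
     * @param enoughNodesTogether whether the merged members are enough to leave Degraded mode
     * @return the mode the merged cache ends up in
     */
    static Mode afterMerge(List<Mode> partitionModes, boolean enoughNodesTogether) {
        // A single Unavailable partition makes the cache Unavailable everywhere.
        if (partitionModes.contains(Mode.UNAVAILABLE)) {
            return Mode.UNAVAILABLE;
        }
        // At least one partition is still Available: nodes outside the merge
        // topology are wiped and receive state from the Available side.
        if (partitionModes.contains(Mode.AVAILABLE)) {
            return Mode.AVAILABLE;
        }
        // Every partition is Degraded: recover only if there are enough nodes together.
        return enoughNodesTogether ? Mode.AVAILABLE : Mode.DEGRADED;
    }
}
```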

While a partition is in Degraded mode, attempting to read or write a key will yield an AvailabilityException. However, in the interval between a minority partition splitting from the rest of the cluster and the cache becoming Degraded, nodes in the minority partition can read any key, even though the value might have been updated in the majority partition.

Write operations in the minority are blocked on the primary owner until the cache enters Degraded mode, and they will fail with an AvailabilityException when retried. But some of the backup owners in the majority partition could have already updated the value before the split was detected, leaving the cache inconsistent even after the merge.

If only some backup owners in the minority partition updated the value, read operations on that node will see the new value until the cache enters Degraded mode. If the other partition stayed available, the value will be replaced by the old value (or a newer one) on merge. But if the other partition also entered Degraded mode, there is no state transfer and the cache will be inconsistent.

Timeout errors

It is sometimes possible for a remote command invocation to time out without any node being down. For example, the NAKACK2 protocol uses a negative acknowledgement system for retransmitting messages, and in the worst case the time to retransmit a message can be longer than the default replication timeout.

If the request from the primary owner to a backup owner times out, the update will not be applied on the primary owner, but it will still be applied on the other backup owners. If the request from the originator to the primary owner times out, the operation may still be applied successfully on all the owners.

Acquiring the lock on the primary owner can also time out. If that happens with a single-key write operation, the entry is not updated. With a multiple-key operation, the update will still be applied for keys with a different primary owner.

In general, when an application receives a TimeoutException, it can assume that the update was performed on some but not all of the nodes.
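A timed-out write may already be applied on some owners. A common application-side response is to repeat the same write so that all owners converge, which is only safe when the operation is idempotent (a plain put of the same value, not an increment). The following is a minimal sketch with a hypothetical helper name. In a real deployment, the exception type passed in would be Infinispan's TimeoutException, whose package differs between versions.

```java
import java.util.function.Supplier;

/**
 * Hypothetical helper (not part of Infinispan): retries an idempotent cache
 * write when it fails with the given timeout exception type. A timed-out write
 * may already be applied on some owners, so only operations that are safe to
 * repeat should be passed in.
 */
final class IdempotentRetry {

    static <T> T retryOnTimeout(Supplier<T> write,
                                Class<? extends RuntimeException> timeoutType,
                                int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return write.get();
            } catch (RuntimeException e) {
                if (!timeoutType.isInstance(e)) {
                    throw e; // not a timeout: propagate unchanged
                }
                last = e; // possibly a partial update: repeat the same idempotent write
            }
        }
        throw last; // every attempt timed out
    }
}
```

Do not pass non-idempotent operations such as a counter increment to this helper. A repeated attempt could apply the change twice on owners that already received it.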

Asynchronous replication

With asynchronous replication, write commands are sent asynchronously both from the originator to the primary owner and from the primary owner to the other nodes, and neither gets any response. The value is only updated on the originator when it receives the command forwarded by the primary owner, so a thread may not see its own updates for a short while.

The primary owner initiates the broadcast of the update while holding the key lock, and JGroups guarantees that messages from one owner will be delivered in the same order on all the targets. That means as long as the consistent hash doesn’t change, updates to the same key will be applied in the same order everywhere.

If the primary owner of a key changes, updates from the old primary owner and from the new primary owner will not be ordered, so different nodes may end up with different histories and final values.
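A toy model shows why a change of primary owner matters. It uses hypothetical names and involves no JGroups code. Each sender's updates keep their own order, but nothing fixes how two senders' updates interleave. With last-write-wins, two replicas can therefore end up with different final values.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Toy model (hypothetical, no JGroups involved) of asynchronous replication
 * for a single key. Delivery preserves each sender's own send order, but not
 * the relative order of two senders' updates.
 */
final class AsyncOrderingDemo {

    /**
     * Interleaves two senders' updates, taking the next update from sender A
     * wherever takeFromA is true; each sender's own order is preserved.
     */
    static List<String> interleave(List<String> a, List<String> b, boolean[] takeFromA) {
        List<String> out = new ArrayList<>();
        int i = 0;
        int j = 0;
        for (boolean fromA : takeFromA) {
            out.add(fromA ? a.get(i++) : b.get(j++));
        }
        if (i != a.size() || j != b.size()) {
            throw new IllegalArgumentException("takeFromA must consume both lists exactly");
        }
        return out;
    }

    /** Applies updates in arrival order; the last one applied wins on this replica. */
    static String finalValue(List<String> arrivalOrder) {
        String value = null;
        for (String update : arrivalOrder) {
            value = update;
        }
        return value;
    }
}
```

With a single sender (an empty second list), every valid interleaving is the same sequence. That is the "same order everywhere" guarantee that holds while the consistent hash does not change.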

If a node joins and becomes a backup owner after a write command was sent from the primary owner to the backups, but before the primary owner updates its own data container, it may receive the value neither as a write command nor via state transfer.

If there is a timeout acquiring the key lock on the primary owner, the change will not be applied anywhere. As with synchronous replication, failure to acquire a lock for one key may not prevent the update of other keys in the same multiple-key write operation.

Shared cache store

With a shared cache store, only the primary owner of each key will write to the cache store. If the primary owner node crashes, the operation is retried and the new primary owner writes the update to the shared store.

When a write to the store fails, the write operation fails. As in local mode, previous writes to other keys in the same multiple-key write operation will not be rolled back, and neither will in-memory writes on other nodes.

With write-behind enabled, store write failures are hidden from the user. With asynchronous replication, errors are also hidden unless the originator is the primary owner and write-behind is disabled.

Assuming there were no write failures, the cluster can be restarted and it will recover the entries it has saved in its shared store (unless purging on startup is enabled in the store configuration).

Like with stores in a non-transactional cache, a read can undo an overlapping write operation’s modifications in memory.

Private cache store

With a private cache store, each node will write its own entries to its store. This allows the use of passivation, storing each entry only in memory or only in the store.

The behaviour on store write failure is the same as with a shared store.

When the cluster is restarted, the order in which nodes are started back up matters, as state transfer will copy the state from the first node to all the other nodes. If the first node to start up has stale entries in its store, it will overwrite newer entries on the joiners.

Like with stores in a non-transactional cache, a read can undo an overlapping write operation’s modifications in memory.

Transactional mode

Similar to transactional local caches, transactional replicated caches register with a transaction manager on the originator.

Pessimistic locking

With pessimistic locking, each key is locked on its primary owner as the application writes to it. Lock-only operations also invoke a remote command on the primary owner.

When the transaction is committed, a one-phase prepare command is invoked synchronously on all the nodes. The prepare command applies the transaction's modifications on all the nodes. Conditional writes are executed unconditionally in this phase, because the originator has already checked the condition.

Key locks are released asynchronously, after all the nodes acknowledged the prepare command. This prevents another transaction from executing between the time the transaction is committed on the primary owner and the time it is committed on the other members.

Handling topology changes

Every node knows about every transaction currently being executed. When a node joins, it receives the currently-prepared transactions from the existing nodes before accepting transactions itself. After processing a prepare command from a previous topology (with a lower topology id), a node will also forward the command to all the other nodes (synchronously).

After a topology change, the new primary owner knows that the transactions started in a previous topology must have acquired their key locks on the previous primary owner. So it doesn’t allow any new transaction to lock a key as long as there are transactions with a lower topology id.
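The topology-id rule can be sketched as a gate on the new primary owner. This is a hypothetical, simplified model. It tracks pending transactions by the topology id they started in, and it refuses all new locks (rather than locks on individual keys) while a transaction from an older topology is pending.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical, simplified model (not Infinispan code): after a topology
 * change, the new primary owner refuses new key locks while any transaction
 * from an older topology is still pending.
 */
final class TopologyLockGate {

    private final Map<String, Integer> pendingTxTopologyIds = new HashMap<>();
    private int currentTopologyId;

    TopologyLockGate(int currentTopologyId) {
        this.currentTopologyId = currentTopologyId;
    }

    /** Records a pending transaction together with the topology id it started in. */
    void register(String txId, int topologyId) {
        pendingTxTopologyIds.put(txId, topologyId);
    }

    /** Called when a transaction commits or rolls back. */
    void complete(String txId) {
        pendingTxTopologyIds.remove(txId);
    }

    /** A topology change installs a higher topology id. */
    void installTopology(int topologyId) {
        currentTopologyId = topologyId;
    }

    /** New locks are granted only when no older-topology transaction is pending. */
    boolean canGrantNewLock() {
        for (int txTopology : pendingTxTopologyIds.values()) {
            if (txTopology < currentTopologyId) {
                return false;
            }
        }
        return true;
    }
}
```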

If a node other than the originator of the transaction leaves the cluster (gracefully or not), the transaction will still succeed.

If the originator crashes before all the other nodes have received the one-phase prepare command, however, nodes that have not received the command will assume it was aborted and roll back any local changes. This will leave the cache inconsistent, with only some nodes having applied the modifications (ISPN-5046).

Note: If the key of a write or lock operation is primary-owned by the originator, the lock command is not replicated to other nodes unless state transfer is in progress.

This can allow a different transaction to execute between the time the lock is acquired on the old primary owner and the time the one-phase prepare command executes on the new primary owner, breaking atomicity and leaving the key value inconsistent (ISPN-5076).

Partition handling: disabled

As in non-transactional caches, the cache will become inconsistent when partition handling is disabled and the cluster does split.

Each partition installs its own consistent hash, so a key will have a primary owner in each partition and both partitions can update the value in parallel. Transactions that sent the one-phase prepare command before the split are also not guaranteed to commit on either all or none of the nodes in a particular partition.

While split, each partition will be able to read its own values. When the partitions merge back, there is no effort to replicate the values from one partition to another. Instead, just like in the non-transactional case, the partition topology with the most nodes becomes the merge topology, nodes not in the merge topology are wiped, and they receive new data from the primary owners in the merge topology.

Partition handling: enabled

Transactional caches use the same algorithm as non-transactional caches to enter or exit degraded mode when nodes crash or partitions merge.

Read or write operations started while the cache is not available will fail with an AvailabilityException. Between the cluster splitting and the minority partition(s) entering degraded mode, read operations will succeed, and read-only transactions will be able to commit. Write operations may block waiting for responses from nodes in the other partition(s), if the primary owner of the written key is not in the local partition. Eventually the partition detects the split and the remote lock commands will fail.

If the primary owners of the keys written by the transaction are all in the local partition, or if the transaction acquired all its locks before the split, the originator will try to commit, and the one-phase prepare command will perform its updates on the nodes in the local partition. The commit will block on the originator while waiting for all the initial members to reply, and it will return successfully when the other partitions’ nodes are removed from the JGroups view (partial commit).

If one partition stays available, its entries will replace all the other partitions’ entries on merge, undoing partial commits in those partitions. But if all the partitions entered degraded mode, partial writes will not be rolled back, and neither will they be replicated to other nodes.

During a merge, entries and transactions started in the majority partition will be replicated to the nodes in other partitions as if those nodes just joined. However, it’s possible that a partition is only available because it didn’t detect the split yet. If there is another properly-active partition, transactions in both partitions may acquire the same key locks on different primary owners (with the single-local-key optimization) and only commit after the merge, leading to inconsistent values.

Timeout errors

Timeouts acquiring key locks or communicating with the primary owners before the commit cause the transaction to be aborted, without writing any updates.

If the one-phase prepare command times out on any of the nodes, the originator will send a rollback command to all the nodes and will roll back the local changes. The nodes that already executed the one-phase prepare command will not roll back their changes, so the key value is going to be inconsistent.

On the other hand, there is no transaction timeout: once a key is locked, it is only unlocked if the transaction is committed/aborted, or if the originator is removed from the cache topology.

Conflict Management and Partition Handling

Infinispan, one of the open source components underlying XtremeCloud Data Grid-web, overhauled the behavior and configuration of partition handling in distributed and replicated caches in version 9.1.0.Final. Partition handling is no longer simply enabled or disabled. Instead, a partition handling strategy is configured, which gives more fine-grained control over a cache’s behavior when a split-brain scenario occurs. In addition, a ConflictManager component was created so that conflicts on cache entries can be resolved on demand by clients and/or automatically during partition merges.

Note: XtremeCloud SSO Version 3.0.1 uses Infinispan 9.3.1 for client purposes and calls the remote Kubernetes service for XtremeCloud Data Grid-web Version 3.0.1, which is based on Infinispan 9.3.3. For a complete list of compatibilities, please refer to the Certification Matrix.

Conflict Manager

During a cache’s lifecycle, inconsistencies can appear between replicas of a cache entry for a variety of reasons (e.g., replication failures or incorrect use of flags). The ConflictManager is a tool that allows clients to retrieve all stored replica values for a given key, and to process a stream of cache entries whose stored replicas have conflicting values. Furthermore, by using implementations of the EntryMergePolicy interface, known conflicts can be resolved deterministically and automatically.

Detecting Conflicts

Conflicts are detected by retrieving each of the stored values for a given key. The ConflictManager retrieves the value stored from each of the key’s write owners defined by the current consistent hash. The .equals method of the stored values is then used to determine whether all values are equal. If all values are equal then no conflicts exist for the key, otherwise a conflict has occurred. Note that null values are returned if no entry exists on a given node, therefore we deem a conflict to have occurred if both a null and non-null value exists for a given key.
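The detection rule translates almost directly into code. The sketch below is not the ConflictManager source. It assumes the per-owner values have already been fetched into a map (the real getAllVersions returns a Map<Address, InternalCacheValue<V>>). It uses Objects.equals, so a null next to a non-null value counts as a conflict, while an all-null map does not.

```java
import java.util.Iterator;
import java.util.Map;
import java.util.Objects;

/**
 * Sketch of the conflict detection rule described above (not Infinispan code):
 * a key has a conflict unless every write owner's value is equal. A null value
 * means the owner holds no entry.
 */
final class ConflictDetection {

    static <A, V> boolean hasConflict(Map<A, V> valuesByOwner) {
        Iterator<V> it = valuesByOwner.values().iterator();
        if (!it.hasNext()) {
            return false; // no owners queried, nothing to compare
        }
        V first = it.next();
        while (it.hasNext()) {
            // Objects.equals: null vs null is equal, null vs non-null is not
            if (!Objects.equals(first, it.next())) {
                return true;
            }
        }
        return false;
    }
}
```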

Merge Policies

When conflicts arise between replicas of a given CacheEntry from XtremeCloud SSO, a conflict resolution algorithm must be defined. This is the role of the EntryMergePolicy interface. The interface consists of a single method, merge, whose returned CacheEntry is used as the “resolved” entry for a given key. When a non-null CacheEntry is returned, that entry’s value is put to all replicas in the cache. However, when the merge implementation returns null, all replicas associated with the conflicting key are removed from the cache.

The merge method takes two parameters: the preferredEntry and otherEntries. In the context of a partition merge, the preferredEntry is the CacheEntry associated with the partition whose coordinator is conducting the merge (or if multiple entries exist in this partition, it’s the primary replica). However, in all other contexts, the preferredEntry is simply the primary replica. The second parameter, otherEntries is simply a list of all other entries associated with the key for which a conflict was detected.

Note: EntryMergePolicy::merge is only called when a conflict has been detected. It is not called if all the CacheEntry replicas are equal.
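To illustrate the merge contract, the sketch below uses a hypothetical, simplified interface that works on plain values instead of CacheEntry objects. The real org.infinispan.conflict.EntryMergePolicy takes a preferred CacheEntry and a List of the other CacheEntry replicas. A null return stands for "remove the key from every replica". The three policies are modelled on the documented behaviour of Infinispan's built-in PREFERRED_ALWAYS, PREFERRED_NON_NULL and REMOVE_ALL policies, not copied from them.

```java
import java.util.List;

/** Hypothetical value-based sketches of merge policies (not Infinispan code). */
final class SimpleMergePolicies {

    /** Simplified stand-in for EntryMergePolicy; a null result means "remove the key". */
    interface Policy<V> {
        V merge(V preferred, List<V> others);
    }

    /** Modelled on PREFERRED_ALWAYS: keep the preferred value, even if it is null. */
    static <V> Policy<V> preferredAlways() {
        return (preferred, others) -> preferred;
    }

    /** Modelled on PREFERRED_NON_NULL: use the preferred value unless it is null,
     *  otherwise the first of the other values (null if there are none). */
    static <V> Policy<V> preferredNonNull() {
        return (preferred, others) ->
                (preferred != null || others.isEmpty()) ? preferred : others.get(0);
    }

    /** Modelled on REMOVE_ALL: always resolve the conflict by removing the key. */
    static <V> Policy<V> removeAll() {
        return (preferred, others) -> null;
    }
}
```

The lambda `(preferredEntry, otherEntries) -> preferredEntry` passed to resolveConflicts in the application usage example follows the same idea as preferredAlways.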

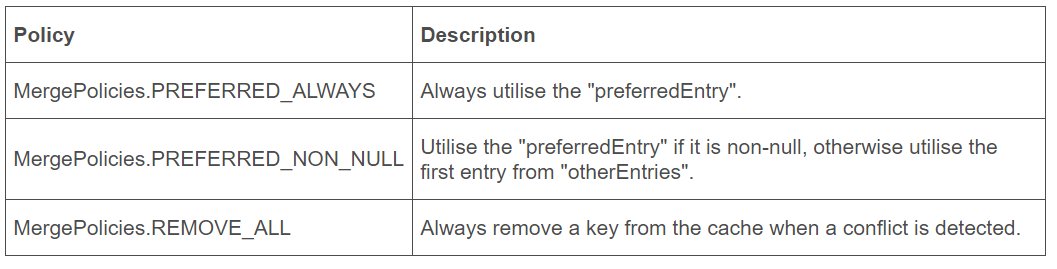

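Currently XtremeCloud Data Grid-web provides the following implementations of EntryMergePolicy:

| Policy | Description |
|---|---|
| MergePolicies.PREFERRED_ALWAYS | Always use the preferredEntry. |
| MergePolicies.PREFERRED_NON_NULL | Use the preferredEntry if it is non-null, otherwise use the first entry from otherEntries. |
| MergePolicies.REMOVE_ALL | Always remove a key from the cache when a conflict is detected. |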

Application Usage

For conflict resolution during partition merges, once an EntryMergePolicy has been configured for the cache, no additional actions are required by the user. However, if an XtremeCloud Data Grid-web user would like to utilize the ConflictManager explicitly in their application, it should be retrieved by passing an AdvancedCache instance to the ConflictManagerFactory.

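```java
import java.io.IOException;
import java.util.Map;

import org.infinispan.Cache;
import org.infinispan.conflict.ConflictManager;
import org.infinispan.conflict.ConflictManagerFactory;
import org.infinispan.container.entries.InternalCacheValue;
import org.infinispan.manager.DefaultCacheManager;
import org.infinispan.manager.EmbeddedCacheManager;
import org.infinispan.remoting.transport.Address;

public class ConflictManagerUsage {
    public static void main(String[] args) throws IOException {
        EmbeddedCacheManager manager = new DefaultCacheManager("example-config.xml");
        Cache<Integer, String> cache = manager.getCache("testCache");
        ConflictManager<Integer, String> crm = ConflictManagerFactory.get(cache.getAdvancedCache());

        // Get all versions of key 1, one per write owner
        Map<Address, InternalCacheValue<String>> versions = crm.getAllVersions(1);

        // Process the conflicts stream and perform some operation on the cache:
        // here, every key whose replicas disagree is removed
        crm.getConflicts().forEach(map -> {
            Integer conflictKey = map.values().iterator().next().getKey();
            cache.remove(conflictKey);
        });

        // Detect and then resolve conflicts using the configured EntryMergePolicy
        crm.resolveConflicts();

        // Detect and then resolve conflicts using the passed EntryMergePolicy instance
        crm.resolveConflicts((preferredEntry, otherEntries) -> preferredEntry);

        manager.stop();
    }
}
```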


Note: Depending on the number of entries in the cache, the getConflicts and resolveConflicts methods can be expensive operations, because both depend on a spliterator that lazily loads cache entries on a per-segment basis. Consequently, when operating in distributed mode with many conflicts, an OutOfMemoryError can occur on the Kubernetes pod that is searching for conflicts.

Partition Handling Strategies

In 9.1.0.Final the partition handling enabled/disabled option has been deprecated and users must now configure an appropriate PartitionHandling strategy for their application. A partition handling strategy determines what operations can be performed on a cache when a split brain event has occurred. Ultimately, in terms of Brewer’s CAP theorem, the configured strategy determines whether the cache’s availability or consistency is sacrificed in the presence of partition(s). Below is a table of the provided strategies and their characteristics:

| Strategy | Description | CAP |
|---|---|---|
| DENY_READ_WRITES | If the partition does not have all owners for a given segment, both reads and writes are denied for all keys in that segment. This is equivalent to setting partition handling to true in Infinispan 9.0. | Consistency |
| ALLOW_READS | Allows reads for a given key if it exists in this partition, but only allows writes if this partition contains all owners of a segment. | Availability |
| ALLOW_READ_WRITES | Allow entries on each partition to diverge, with conflicts resolved during merge. This is equivalent to setting partition handling to false in Infinispan 9.0. | Availability |
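The per-segment checks in the strategy table can be sketched as two predicates. This is a hypothetical model, not Infinispan code, and it assumes the checks apply only after a split has been detected. It also interprets ALLOW_READS' "if it exists in this partition" as "at least one owner of the key's segment is a member of this partition". That interpretation is an assumption.

```java
import java.util.Collection;
import java.util.Set;

/** Hypothetical sketch of per-segment partition strategy checks (not Infinispan code). */
final class PartitionStrategyCheck {

    enum Strategy { DENY_READ_WRITES, ALLOW_READS, ALLOW_READ_WRITES }

    static boolean canWrite(Strategy strategy, Set<String> partitionMembers, Collection<String> segmentOwners) {
        if (strategy == Strategy.ALLOW_READ_WRITES) {
            return true; // entries may diverge; conflicts are resolved on merge
        }
        // DENY_READ_WRITES and ALLOW_READS both require every owner of the segment.
        return partitionMembers.containsAll(segmentOwners);
    }

    static boolean canRead(Strategy strategy, Set<String> partitionMembers, Collection<String> segmentOwners) {
        switch (strategy) {
            case ALLOW_READ_WRITES:
                return true;
            case ALLOW_READS:
                // Assumption: the key "exists in this partition" if any owner is a member here.
                for (String owner : segmentOwners) {
                    if (partitionMembers.contains(owner)) {
                        return true;
                    }
                }
                return false;
            default: // DENY_READ_WRITES
                return partitionMembers.containsAll(segmentOwners);
        }
    }
}
```

The DENY_READ_WRITES read check corresponds to the "Not all owners are in this partition" message in the troubleshooting log at the end of this section.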

Conflict Resolution on Partition Merge

When utilizing the ALLOW_READ_WRITES partition strategy it is possible for the values of cache entries to diverge between competing partitions. Therefore, when the two partitions merge, it is necessary for these conflicts to be resolved. Internally XtremeCloud Data Grid-web utilises a cache’s ConflictManager to search for cache entry conflicts and then applies the configured EntryMergePolicy to automatically resolve said conflicts before rebalancing the cache. This conflict resolution is completely automatic and does not require any additional code or input from XtremeCloud Data Grid-web users.

Note that if you do not want conflicts to be resolved automatically during a partition merge (the behaviour before 9.1.x), you can set the merge policy to null (or NONE in XML).

Programmatic Configuration

```java
Configuration configuration = new ConfigurationBuilder()
        .clustering()
            .partitionHandling()
                .whenSplit(PartitionHandling.ALLOW_READ_WRITES)
                .mergePolicy(MergePolicies.PREFERRED_ALWAYS)
        .build();
```


Conclusion

Partition handling has been overhauled in Infinispan 9.1.0.Final to allow for increased control over a cache’s behavior. We have introduced the ConflictManager which enables users to inspect and manage the consistency of their cache entries via custom and provided merge policies.

Additional Information

With DENY_READ_WRITES, XtremeCloud Data Grid-web tries to keep the cache consistent, but this is not always possible because of the Two Generals’ Problem. If the split occurs during a write, the values on different owners may diverge, and there is nothing you can do to prevent it. Such inconsistency may happen even during regular operation (without a partition), e.g., when some timeouts are exceeded. In short, when an operation ends with an exception, there is a chance that the owners are inconsistent. You therefore need to code your application so that it can tolerate this state, by providing wrong data or failing some operations gracefully rather than crashing completely.
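One way to fail gracefully is to wrap cache reads so that an availability failure becomes an empty result instead of an error. The following is a minimal sketch with a hypothetical helper name. In practice, the exception type would be org.infinispan.partitionhandling.AvailabilityException, the class that appears in the log below.

```java
import java.util.Optional;
import java.util.function.Supplier;

/**
 * Hypothetical helper (not part of Infinispan): runs a cache read and, if it
 * fails with the given "unavailable" exception type, returns an empty result
 * so the caller can degrade gracefully instead of crashing.
 */
final class GracefulRead {

    static <T> Optional<T> readOrEmpty(Supplier<T> read, Class<? extends RuntimeException> unavailableType) {
        try {
            return Optional.ofNullable(read.get());
        } catch (RuntimeException e) {
            if (unavailableType.isInstance(e)) {
                return Optional.empty(); // e.g. the key's owners are not all in this partition
            }
            throw e; // any other failure is propagated unchanged
        }
    }
}
```

A typical call would be `GracefulRead.readOrEmpty(() -> cache.get(key), AvailabilityException.class)`. The trade-off is that an empty Optional no longer distinguishes "key absent" from "key unavailable". Callers that need to tell the two apart should catch the exception directly.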

When the cluster joins back together, a reconciliation process tries to fix these inconsistencies by making all owners hold the same value. Configuring MergePolicy.NONE disables this process. Whether you can accept cache.get() returning different values is up to you, but you are generally better off eventually reaching a consistent state.

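Troubleshooting Example

In the following log, a Keycloak logout (LogoutEndpoint.logout) reads a client session from the cache. The PartitionHandlingInterceptor rejects the read with an AvailabilityException (ISPN000306) because not all owners of the key are in the local partition, which is consistent with the DENY_READ_WRITES strategy described above.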

```text
18:47:08,814 ERROR [org.infinispan.interceptors.impl.InvocationContextInterceptor] (default task-4464) ISPN000136: Error executing command GetKeyValueCommand, writing keys []: org.infinispan.partitionhandling.AvailabilityException: ISPN000306: Key '4e5b1e8f-d0ec-4e49-bbe5-64f08e42e914' is not available. Not all owners are in this partition
    at org.infinispan.partitionhandling.impl.PartitionHandlingInterceptor.handleDataReadReturn(PartitionHandlingInterceptor.java:154)
    at org.infinispan.interceptors.InvocationFinallyAction.apply(InvocationFinallyAction.java:21)
    at org.infinispan.interceptors.impl.SimpleAsyncInvocationStage.addCallback(SimpleAsyncInvocationStage.java:70)
    at org.infinispan.interceptors.InvocationStage.andFinally(InvocationStage.java:60)
    at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextAndFinally(BaseAsyncInterceptor.java:157)
    at org.infinispan.partitionhandling.impl.PartitionHandlingInterceptor.handleDataReadCommand(PartitionHandlingInterceptor.java:140)
    at org.infinispan.partitionhandling.impl.PartitionHandlingInterceptor.visitGetKeyValueCommand(PartitionHandlingInterceptor.java:130)
    at org.infinispan.commands.read.GetKeyValueCommand.acceptVisitor(GetKeyValueCommand.java:39)
    at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextAndHandle(BaseAsyncInterceptor.java:183)
    at org.infinispan.interceptors.impl.BaseStateTransferInterceptor.handleReadCommand(BaseStateTransferInterceptor.java:185)
    at org.infinispan.interceptors.impl.BaseStateTransferInterceptor.visitGetKeyValueCommand(BaseStateTransferInterceptor.java:168)
    at org.infinispan.commands.read.GetKeyValueCommand.acceptVisitor(GetKeyValueCommand.java:39)
    at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNext(BaseAsyncInterceptor.java:54)
    at org.infinispan.interceptors.DDAsyncInterceptor.handleDefault(DDAsyncInterceptor.java:54)
    at org.infinispan.interceptors.DDAsyncInterceptor.visitGetKeyValueCommand(DDAsyncInterceptor.java:106)
    at org.infinispan.commands.read.GetKeyValueCommand.acceptVisitor(GetKeyValueCommand.java:39)
    at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextAndExceptionally(BaseAsyncInterceptor.java:123)
    at org.infinispan.interceptors.impl.InvocationContextInterceptor.visitCommand(InvocationContextInterceptor.java:90)
    at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNext(BaseAsyncInterceptor.java:56)
    at org.infinispan.interceptors.DDAsyncInterceptor.handleDefault(DDAsyncInterceptor.java:54)
    at org.infinispan.interceptors.DDAsyncInterceptor.visitGetKeyValueCommand(DDAsyncInterceptor.java:106)
    at org.infinispan.commands.read.GetKeyValueCommand.acceptVisitor(GetKeyValueCommand.java:39)
    at org.infinispan.interceptors.DDAsyncInterceptor.visitCommand(DDAsyncInterceptor.java:50)
    at org.infinispan.interceptors.impl.AsyncInterceptorChainImpl.invoke(AsyncInterceptorChainImpl.java:248)
    at org.infinispan.cache.impl.CacheImpl.get(CacheImpl.java:479)
    at org.infinispan.cache.impl.CacheImpl.get(CacheImpl.java:472)
    at org.infinispan.cache.impl.AbstractDelegatingCache.get(AbstractDelegatingCache.java:348)
    at org.infinispan.cache.impl.EncoderCache.get(EncoderCache.java:659)
    at org.infinispan.cache.impl.AbstractDelegatingCache.get(AbstractDelegatingCache.java:348)
    at org.keycloak.models.sessions.infinispan.changes.InfinispanChangelogBasedTransaction.get(InfinispanChangelogBasedTransaction.java:120)
    at org.keycloak.models.sessions.infinispan.InfinispanUserSessionProvider.getClientSessionEntity(InfinispanUserSessionProvider.java:289)
    at org.keycloak.models.sessions.infinispan.InfinispanUserSessionProvider.getClientSession(InfinispanUserSessionProvider.java:283)
    at org.keycloak.models.sessions.infinispan.UserSessionAdapter.lambda$getAuthenticatedClientSessions$0(UserSessionAdapter.java:92)
    at java.util.concurrent.ConcurrentHashMap.forEach(ConcurrentHashMap.java:1597)
    at org.keycloak.models.sessions.infinispan.entities.AuthenticatedClientSessionStore.forEach(AuthenticatedClientSessionStore.java:59)
    at org.keycloak.models.sessions.infinispan.UserSessionAdapter.getAuthenticatedClientSessions(UserSessionAdapter.java:88)
    at org.keycloak.services.managers.AuthenticationManager.browserLogoutAllClients(AuthenticationManager.java:535)
    at org.keycloak.services.managers.AuthenticationManager.browserLogout(AuthenticationManager.java:517)
    at org.keycloak.protocol.oidc.endpoints.LogoutEndpoint.logout(LogoutEndpoint.java:138)
    at sun.reflect.GeneratedMethodAccessor691.invoke(Unknown Source)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.jboss.resteasy.core.MethodInjectorImpl.invoke(MethodInjectorImpl.java:140)
    at org.jboss.resteasy.core.ResourceMethodInvoker.internalInvokeOnTarget(ResourceMethodInvoker.java:509)
    at org.jboss.resteasy.core.ResourceMethodInvoker.invokeOnTargetAfterFilter(ResourceMethodInvoker.java:399)
    at org.jboss.resteasy.core.ResourceMethodInvoker.lambda$invokeOnTarget$0(ResourceMethodInvoker.java:363)
    at org.jboss.resteasy.core.interception.PreMatchContainerRequestContext.filter(PreMatchContainerRequestContext.java:358)
    at org.jboss.resteasy.core.ResourceMethodInvoker.invokeOnTarget(ResourceMethodInvoker.java:365)
    at org.jboss.resteasy.core.ResourceMethodInvoker.invoke(ResourceMethodInvoker.java:337)
    at org.jboss.resteasy.core.ResourceLocatorInvoker.invokeOnTargetObject(ResourceLocatorInvoker.java:137)
    at org.jboss.resteasy.core.ResourceLocatorInvoker.invoke(ResourceLocatorInvoker.java:106)
    at org.jboss.resteasy.core.ResourceLocatorInvoker.invokeOnTargetObject(ResourceLocatorInvoker.java:132)
    at org.jboss.resteasy.core.ResourceLocatorInvoker.invoke(ResourceLocatorInvoker.java:100)
    at org.jboss.resteasy.core.SynchronousDispatcher.invoke(SynchronousDispatcher.java:443)
    at org.jboss.resteasy.core.SynchronousDispatcher.lambda$invoke$4(SynchronousDispatcher.java:233)
    at org.jboss.resteasy.core.SynchronousDispatcher.lambda$preprocess$0(SynchronousDispatcher.java:139)
    at org.jboss.resteasy.core.interception.PreMatchContainerRequestContext.filter(PreMatchContainerRequestContext.java:358)
    at org.jboss.resteasy.core.SynchronousDispatcher.preprocess(SynchronousDispatcher.java:142)
    at org.jboss.resteasy.core.SynchronousDispatcher.invoke(SynchronousDispatcher.java:219)
    at org.jboss.resteasy.plugins.server.servlet.ServletContainerDispatcher.service(ServletContainerDispatcher.java:227)
    at org.jboss.resteasy.plugins.server.servlet.HttpServletDispatcher.service(HttpServletDispatcher.java:56)
    at org.jboss.resteasy.plugins.server.servlet.HttpServletDispatcher.service(HttpServletDispatcher.java:51)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:791)
    at io.undertow.servlet.handlers.ServletHandler.handleRequest(ServletHandler.java:74)
    at io.undertow.servlet.handlers.FilterHandler$FilterChainImpl.doFilter(FilterHandler.java:129)
    at org.keycloak.services.filters.KeycloakSessionServletFilter.doFilter(KeycloakSessionServletFilter.java:90)
    at io.undertow.servlet.core.ManagedFilter.doFilter(ManagedFilter.java:61)
    at io.undertow.servlet.handlers.FilterHandler$FilterChainImpl.doFilter(FilterHandler.java:131)
    at io.undertow.servlet.handlers.FilterHandler.handleRequest(FilterHandler.java:84)
    at io.undertow.servlet.handlers.security.ServletSecurityRoleHandler.handleRequest(ServletSecurityRoleHandler.java:62)
    at io.undertow.servlet.handlers.ServletChain$1.handleRequest(ServletChain.java:68)
    at io.undertow.servlet.handlers.ServletDispatchingHandler.handleRequest(ServletDispatchingHandler.java:36)
    at org.wildfly.extension.undertow.security.SecurityContextAssociationHandler.handleRequest(SecurityContextAssociationHandler.java:78)
    at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
    at io.undertow.servlet.handlers.security.SSLInformationAssociationHandler.handleRequest(SSLInformationAssociationHandler.java:132)
    at io.undertow.servlet.handlers.security.ServletAuthenticationCallHandler.handleRequest(ServletAuthenticationCallHandler.java:57)
    at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
    at io.undertow.security.handlers.AbstractConfidentialityHandler.handleRequest(AbstractConfidentialityHandler.java:46)
    at io.undertow.servlet.handlers.security.ServletConfidentialityConstraintHandler.handleRequest(ServletConfidentialityConstraintHandler.java:64)
    at io.undertow.security.handlers.AuthenticationMechanismsHandler.handleRequest(AuthenticationMechanismsHandler.java:60)
    at io.undertow.servlet.handlers.security.CachedAuthenticatedSessionHandler.handleRequest(CachedAuthenticatedSessionHandler.java:77)
    at io.undertow.security.handlers.NotificationReceiverHandler.handleRequest(NotificationReceiverHandler.java:50)
    at io.undertow.security.handlers.AbstractSecurityContextAssociationHandler.handleRequest(AbstractSecurityContextAssociationHandler.java:43)
    at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
    at org.wildfly.extension.undertow.security.jacc.JACCContextIdHandler.handleRequest(JACCContextIdHandler.java:61)
    at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
    at org.wildfly.extension.undertow.deployment.GlobalRequestControllerHandler.handleRequest(GlobalRequestControllerHandler.java:68)
    at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
    at io.undertow.servlet.handlers.ServletInitialHandler.handleFirstRequest(ServletInitialHandler.java:292)
```

at io.undertow.servlet.handlers.ServletInitialHandler.access$100(ServletInitialHandler.java:81)

at io.undertow.servlet.handlers.ServletInitialHandler$2.call(ServletInitialHandler.java:138)

at io.undertow.servlet.handlers.ServletInitialHandler$2.call(ServletInitialHandler.java:135)

at io.undertow.servlet.core.ServletRequestContextThreadSetupAction$1.call(ServletRequestContextThreadSetupAction.java:48)

at io.undertow.servlet.core.ContextClassLoaderSetupAction$1.call(ContextClassLoaderSetupAction.java:43)

at org.wildfly.extension.undertow.security.SecurityContextThreadSetupAction.lambda$create$0(SecurityContextThreadSetupAction.java:105)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at io.undertow.servlet.handlers.ServletInitialHandler.dispatchRequest(ServletInitialHandler.java:272)

at io.undertow.servlet.handlers.ServletInitialHandler.access$000(ServletInitialHandler.java:81)

at io.undertow.servlet.handlers.ServletInitialHandler$1.handleRequest(ServletInitialHandler.java:104)

at io.undertow.server.Connectors.executeRootHandler(Connectors.java:360)

at io.undertow.server.HttpServerExchange$1.run(HttpServerExchange.java:830)

at org.jboss.threads.ContextClassLoaderSavingRunnable.run(ContextClassLoaderSavingRunnable.java:35)

at org.jboss.threads.EnhancedQueueExecutor.safeRun(EnhancedQueueExecutor.java:1985)

at org.jboss.threads.EnhancedQueueExecutor$ThreadBody.doRunTask(EnhancedQueueExecutor.java:1487)

at org.jboss.threads.EnhancedQueueExecutor$ThreadBody.run(EnhancedQueueExecutor.java:1378)

at java.lang.Thread.run(Thread.java:748)

18:47:08,817 ERROR [org.keycloak.services.error.KeycloakErrorHandler] (default task-4464) Uncaught server error: org.infinispan.partitionhandling.AvailabilityException: ISPN000306: Key '4e5b1e8f-d0ec-4e49-bbe5-64f08e42e914' is not available. Not all owners are in this partition

at org.infinispan.partitionhandling.impl.PartitionHandlingInterceptor.handleDataReadReturn(PartitionHandlingInterceptor.java:154)

at org.infinispan.interceptors.InvocationFinallyAction.apply(InvocationFinallyAction.java:21)

at org.infinispan.interceptors.impl.SimpleAsyncInvocationStage.addCallback(SimpleAsyncInvocationStage.java:70)

at org.infinispan.interceptors.InvocationStage.andFinally(InvocationStage.java:60)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextAndFinally(BaseAsyncInterceptor.java:157)

at org.infinispan.partitionhandling.impl.PartitionHandlingInterceptor.handleDataReadCommand(PartitionHandlingInterceptor.java:140)

at org.infinispan.partitionhandling.impl.PartitionHandlingInterceptor.visitGetKeyValueCommand(PartitionHandlingInterceptor.java:130)

at org.infinispan.commands.read.GetKeyValueCommand.acceptVisitor(GetKeyValueCommand.java:39)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextAndHandle(BaseAsyncInterceptor.java:183)

at org.infinispan.interceptors.impl.BaseStateTransferInterceptor.handleReadCommand(BaseStateTransferInterceptor.java:185)

at org.infinispan.interceptors.impl.BaseStateTransferInterceptor.visitGetKeyValueCommand(BaseStateTransferInterceptor.java:168)

at org.infinispan.commands.read.GetKeyValueCommand.acceptVisitor(GetKeyValueCommand.java:39)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNext(BaseAsyncInterceptor.java:54)

at org.infinispan.interceptors.DDAsyncInterceptor.handleDefault(DDAsyncInterceptor.java:54)

at org.infinispan.interceptors.DDAsyncInterceptor.visitGetKeyValueCommand(DDAsyncInterceptor.java:106)

at org.infinispan.commands.read.GetKeyValueCommand.acceptVisitor(GetKeyValueCommand.java:39)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextAndExceptionally(BaseAsyncInterceptor.java:123)

at org.infinispan.interceptors.impl.InvocationContextInterceptor.visitCommand(InvocationContextInterceptor.java:90)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNext(BaseAsyncInterceptor.java:56)

at org.infinispan.interceptors.DDAsyncInterceptor.handleDefault(DDAsyncInterceptor.java:54)

at org.infinispan.interceptors.DDAsyncInterceptor.visitGetKeyValueCommand(DDAsyncInterceptor.java:106)

at org.infinispan.commands.read.GetKeyValueCommand.acceptVisitor(GetKeyValueCommand.java:39)

at org.infinispan.interceptors.DDAsyncInterceptor.visitCommand(DDAsyncInterceptor.java:50)

at org.infinispan.interceptors.impl.AsyncInterceptorChainImpl.invoke(AsyncInterceptorChainImpl.java:248)

at org.infinispan.cache.impl.CacheImpl.get(CacheImpl.java:479)

at org.infinispan.cache.impl.CacheImpl.get(CacheImpl.java:472)

at org.infinispan.cache.impl.AbstractDelegatingCache.get(AbstractDelegatingCache.java:348)

at org.infinispan.cache.impl.EncoderCache.get(EncoderCache.java:659)

at org.infinispan.cache.impl.AbstractDelegatingCache.get(AbstractDelegatingCache.java:348)

at org.keycloak.models.sessions.infinispan.changes.InfinispanChangelogBasedTransaction.get(InfinispanChangelogBasedTransaction.java:120)

at org.keycloak.models.sessions.infinispan.InfinispanUserSessionProvider.getClientSessionEntity(InfinispanUserSessionProvider.java:289)

at org.keycloak.models.sessions.infinispan.InfinispanUserSessionProvider.getClientSession(InfinispanUserSessionProvider.java:283)

at org.keycloak.models.sessions.infinispan.UserSessionAdapter.lambda$getAuthenticatedClientSessions$0(UserSessionAdapter.java:92)

at java.util.concurrent.ConcurrentHashMap.forEach(ConcurrentHashMap.java:1597)

at org.keycloak.models.sessions.infinispan.entities.AuthenticatedClientSessionStore.forEach(AuthenticatedClientSessionStore.java:59)

at org.keycloak.models.sessions.infinispan.UserSessionAdapter.getAuthenticatedClientSessions(UserSessionAdapter.java:88)

at org.keycloak.services.managers.AuthenticationManager.browserLogoutAllClients(AuthenticationManager.java:535)

at org.keycloak.services.managers.AuthenticationManager.browserLogout(AuthenticationManager.java:517)

at org.keycloak.protocol.oidc.endpoints.LogoutEndpoint.logout(LogoutEndpoint.java:138)

at sun.reflect.GeneratedMethodAccessor691.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.jboss.resteasy.core.MethodInjectorImpl.invoke(MethodInjectorImpl.java:140)

at org.jboss.resteasy.core.ResourceMethodInvoker.internalInvokeOnTarget(ResourceMethodInvoker.java:509)

at org.jboss.resteasy.core.ResourceMethodInvoker.invokeOnTargetAfterFilter(ResourceMethodInvoker.java:399)

at org.jboss.resteasy.core.ResourceMethodInvoker.lambda$invokeOnTarget$0(ResourceMethodInvoker.java:363)

at org.jboss.resteasy.core.interception.PreMatchContainerRequestContext.filter(PreMatchContainerRequestContext.java:358)

at org.jboss.resteasy.core.ResourceMethodInvoker.invokeOnTarget(ResourceMethodInvoker.java:365)

at org.jboss.resteasy.core.ResourceMethodInvoker.invoke(ResourceMethodInvoker.java:337)

at org.jboss.resteasy.core.ResourceLocatorInvoker.invokeOnTargetObject(ResourceLocatorInvoker.java:137)

at org.jboss.resteasy.core.ResourceLocatorInvoker.invoke(ResourceLocatorInvoker.java:106)

at org.jboss.resteasy.core.ResourceLocatorInvoker.invokeOnTargetObject(ResourceLocatorInvoker.java:132)

at org.jboss.resteasy.core.ResourceLocatorInvoker.invoke(ResourceLocatorInvoker.java:100)

at org.jboss.resteasy.core.SynchronousDispatcher.invoke(SynchronousDispatcher.java:443)

at org.jboss.resteasy.core.SynchronousDispatcher.lambda$invoke$4(SynchronousDispatcher.java:233)

at org.jboss.resteasy.core.SynchronousDispatcher.lambda$preprocess$0(SynchronousDispatcher.java:139)

at org.jboss.resteasy.core.interception.PreMatchContainerRequestContext.filter(PreMatchContainerRequestContext.java:358)

at org.jboss.resteasy.core.SynchronousDispatcher.preprocess(SynchronousDispatcher.java:142)

at org.jboss.resteasy.core.SynchronousDispatcher.invoke(SynchronousDispatcher.java:219)

at org.jboss.resteasy.plugins.server.servlet.ServletContainerDispatcher.service(ServletContainerDispatcher.java:227)

at org.jboss.resteasy.plugins.server.servlet.HttpServletDispatcher.service(HttpServletDispatcher.java:56)

at org.jboss.resteasy.plugins.server.servlet.HttpServletDispatcher.service(HttpServletDispatcher.java:51)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:791)

at io.undertow.servlet.handlers.ServletHandler.handleRequest(ServletHandler.java:74)

at io.undertow.servlet.handlers.FilterHandler$FilterChainImpl.doFilter(FilterHandler.java:129)

at org.keycloak.services.filters.KeycloakSessionServletFilter.doFilter(KeycloakSessionServletFilter.java:90)

at io.undertow.servlet.core.ManagedFilter.doFilter(ManagedFilter.java:61)

at io.undertow.servlet.handlers.FilterHandler$FilterChainImpl.doFilter(FilterHandler.java:131)

at io.undertow.servlet.handlers.FilterHandler.handleRequest(FilterHandler.java:84)

at io.undertow.servlet.handlers.security.ServletSecurityRoleHandler.handleRequest(ServletSecurityRoleHandler.java:62)

at io.undertow.servlet.handlers.ServletChain$1.handleRequest(ServletChain.java:68)

at io.undertow.servlet.handlers.ServletDispatchingHandler.handleRequest(ServletDispatchingHandler.java:36)

at org.wildfly.extension.undertow.security.SecurityContextAssociationHandler.handleRequest(SecurityContextAssociationHandler.java:78)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at io.undertow.servlet.handlers.security.SSLInformationAssociationHandler.handleRequest(SSLInformationAssociationHandler.java:132)

at io.undertow.servlet.handlers.security.ServletAuthenticationCallHandler.handleRequest(ServletAuthenticationCallHandler.java:57)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at io.undertow.security.handlers.AbstractConfidentialityHandler.handleRequest(AbstractConfidentialityHandler.java:46)

at io.undertow.servlet.handlers.security.ServletConfidentialityConstraintHandler.handleRequest(ServletConfidentialityConstraintHandler.java:64)

at io.undertow.security.handlers.AuthenticationMechanismsHandler.handleRequest(AuthenticationMechanismsHandler.java:60)

at io.undertow.servlet.handlers.security.CachedAuthenticatedSessionHandler.handleRequest(CachedAuthenticatedSessionHandler.java:77)

at io.undertow.security.handlers.NotificationReceiverHandler.handleRequest(NotificationReceiverHandler.java:50)

at io.undertow.security.handlers.AbstractSecurityContextAssociationHandler.handleRequest(AbstractSecurityContextAssociationHandler.java:43)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at org.wildfly.extension.undertow.security.jacc.JACCContextIdHandler.handleRequest(JACCContextIdHandler.java:61)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at org.wildfly.extension.undertow.deployment.GlobalRequestControllerHandler.handleRequest(GlobalRequestControllerHandler.java:68)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at io.undertow.servlet.handlers.ServletInitialHandler.handleFirstRequest(ServletInitialHandler.java:292)

at io.undertow.servlet.handlers.ServletInitialHandler.access$100(ServletInitialHandler.java:81)

at io.undertow.servlet.handlers.ServletInitialHandler$2.call(ServletInitialHandler.java:138)

at io.undertow.servlet.handlers.ServletInitialHandler$2.call(ServletInitialHandler.java:135)

at io.undertow.servlet.core.ServletRequestContextThreadSetupAction$1.call(ServletRequestContextThreadSetupAction.java:48)

at io.undertow.servlet.core.ContextClassLoaderSetupAction$1.call(ContextClassLoaderSetupAction.java:43)

at org.wildfly.extension.undertow.security.SecurityContextThreadSetupAction.lambda$create$0(SecurityContextThreadSetupAction.java:105)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at io.undertow.servlet.handlers.ServletInitialHandler.dispatchRequest(ServletInitialHandler.java:272)

at io.undertow.servlet.handlers.ServletInitialHandler.access$000(ServletInitialHandler.java:81)

at io.undertow.servlet.handlers.ServletInitialHandler$1.handleRequest(ServletInitialHandler.java:104)

at io.undertow.server.Connectors.executeRootHandler(Connectors.java:360)

at io.undertow.server.HttpServerExchange$1.run(HttpServerExchange.java:830)

at org.jboss.threads.ContextClassLoaderSavingRunnable.run(ContextClassLoaderSavingRunnable.java:35)

at org.jboss.threads.EnhancedQueueExecutor.safeRun(EnhancedQueueExecutor.java:1985)

at org.jboss.threads.EnhancedQueueExecutor$ThreadBody.doRunTask(EnhancedQueueExecutor.java:1487)

at org.jboss.threads.EnhancedQueueExecutor$ThreadBody.run(EnhancedQueueExecutor.java:1378)

at java.lang.Thread.run(Thread.java:748)

Suppressed: org.infinispan.util.logging.TraceException

at org.infinispan.interceptors.impl.SimpleAsyncInvocationStage.get(SimpleAsyncInvocationStage.java:41)

at org.infinispan.interceptors.impl.AsyncInterceptorChainImpl.invoke(AsyncInterceptorChainImpl.java:250)

... 85 more

What could have caused this error? If you have been adjusting the partitioning strategy outside of what Eupraxia Labs delivers in the ***cluster-
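The ISPN000306 message says a read was refused because not every owner of the key was in the local partition. The Java sketch below is a simplified model of the documented Infinispan partition-handling rules for a cache in degraded mode. It assumes the DENY_READ_WRITES strategy implied by the "Not all owners" wording. The class, enums, method, and pod names (`KeyAvailabilityModel`, `canRead`, `sso-0`, `sso-1`) are hypothetical. This is not Infinispan's own `PartitionHandlingManagerImpl` code.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical, simplified model of the per-key read check behind ISPN000306.
// Names are illustrative only; this is not Infinispan's implementation.
class KeyAvailabilityModel {

    // Named after Infinispan's PartitionHandling strategies.
    enum Strategy { DENY_READ_WRITES, ALLOW_READS, ALLOW_READ_WRITES }

    // Simplified availability mode of the cache in the local partition.
    enum Mode { AVAILABLE, DEGRADED }

    // Returns true if a read of a key held by `owners` may proceed in a
    // partition whose reachable members are `partitionMembers`.
    static boolean canRead(Strategy strategy, Mode mode,
                           List<String> owners, Set<String> partitionMembers) {
        if (strategy == Strategy.ALLOW_READ_WRITES || mode == Mode.AVAILABLE) {
            return true; // no per-key partition check applies
        }
        if (strategy == Strategy.ALLOW_READS) {
            // At least one copy of the key must be reachable.
            return owners.stream().anyMatch(partitionMembers::contains);
        }
        // DENY_READ_WRITES: every owner must be in the local partition,
        // otherwise the read fails ("Not all owners are in this partition").
        return partitionMembers.containsAll(owners);
    }

    public static void main(String[] args) {
        // Hypothetical split: the key is owned by sso-0 and sso-1,
        // but the local partition only contains sso-0.
        List<String> owners = Arrays.asList("sso-0", "sso-1");
        Set<String> localPartition = new HashSet<>(Arrays.asList("sso-0"));

        System.out.println(canRead(Strategy.DENY_READ_WRITES, Mode.DEGRADED, owners, localPartition)); // false
        System.out.println(canRead(Strategy.ALLOW_READS, Mode.DEGRADED, owners, localPartition));      // true
    }
}
```

Under this model, the logout path in the trace above (`AuthenticationManager.browserLogout` iterating client sessions) fails as soon as it reads a single client session whose owners are split across partitions. A read-permissive strategy would avoid that failure, but it may return a copy that is stale relative to the other partition.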

Here’s another error due to an unexpected XtremeCloud SSO pod failure:

Sep 19 09:00:32 sso-dev-xtremecloud-sso-gcp-0 xtremecloud-sso-gcp ERROR 14:00:32,700 ERROR [org.infinispan.interceptors.impl.InvocationContextInterceptor] (default task-6639) ISPN000136: Error executing command EntrySetCommand, writing keys []: org.infinispan.partitionhandling.AvailabilityException: ISPN000305: Cluster is operating in degraded mode because of node failures.

at org.infinispan.partitionhandling.impl.PartitionHandlingManagerImpl.checkBulkRead(PartitionHandlingManagerImpl.java:114)

at org.infinispan.partitionhandling.impl.PartitionHandlingInterceptor.visitEntrySetCommand(PartitionHandlingInterceptor.java:122)

at org.infinispan.commands.read.EntrySetCommand.acceptVisitor(EntrySetCommand.java:61)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNext(BaseAsyncInterceptor.java:54)

at org.infinispan.statetransfer.StateTransferInterceptor.handleDefault(StateTransferInterceptor.java:352)

at org.infinispan.interceptors.DDAsyncInterceptor.visitEntrySetCommand(DDAsyncInterceptor.java:127)

at org.infinispan.commands.read.EntrySetCommand.acceptVisitor(EntrySetCommand.java:61)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNext(BaseAsyncInterceptor.java:54)

at org.infinispan.interceptors.DDAsyncInterceptor.handleDefault(DDAsyncInterceptor.java:54)

at org.infinispan.interceptors.DDAsyncInterceptor.visitEntrySetCommand(DDAsyncInterceptor.java:127)

at org.infinispan.commands.read.EntrySetCommand.acceptVisitor(EntrySetCommand.java:61)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextAndExceptionally(BaseAsyncInterceptor.java:123)

at org.infinispan.interceptors.impl.InvocationContextInterceptor.visitCommand(InvocationContextInterceptor.java:90)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextThenApply(BaseAsyncInterceptor.java:76)

at org.infinispan.interceptors.distribution.DistributionBulkInterceptor.visitEntrySetCommand(DistributionBulkInterceptor.java:59)

at org.infinispan.commands.read.EntrySetCommand.acceptVisitor(EntrySetCommand.java:61)

at org.infinispan.interceptors.DDAsyncInterceptor.visitCommand(DDAsyncInterceptor.java:50)

at org.infinispan.interceptors.impl.AsyncInterceptorChainImpl.invoke(AsyncInterceptorChainImpl.java:248)

at org.infinispan.cache.impl.CacheImpl.entrySet(CacheImpl.java:828)

at org.infinispan.cache.impl.DecoratedCache.entrySet(DecoratedCache.java:590)

at org.infinispan.cache.impl.AbstractDelegatingCache.entrySet(AbstractDelegatingCache.java:407)

at org.infinispan.cache.impl.EncoderCache.entrySet(EncoderCache.java:718)

at org.infinispan.cache.impl.AbstractDelegatingCache.entrySet(AbstractDelegatingCache.java:407)

at org.keycloak.models.sessions.infinispan.InfinispanUserSessionProvider.getActiveClientSessionStats(InfinispanUserSessionProvider.java:430)

at org.keycloak.services.resources.admin.RealmAdminResource.getClientSessionStats(RealmAdminResource.java:623)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.jboss.resteasy.core.MethodInjectorImpl.invoke(MethodInjectorImpl.java:140)

at org.jboss.resteasy.core.ResourceMethodInvoker.internalInvokeOnTarget(ResourceMethodInvoker.java:509)

at org.jboss.resteasy.core.ResourceMethodInvoker.invokeOnTargetAfterFilter(ResourceMethodInvoker.java:399)

at org.jboss.resteasy.core.ResourceMethodInvoker.lambda$invokeOnTarget$0(ResourceMethodInvoker.java:363)

at org.jboss.resteasy.core.interception.PreMatchContainerRequestContext.filter(PreMatchContainerRequestContext.java:358)

at org.jboss.resteasy.core.ResourceMethodInvoker.invokeOnTarget(ResourceMethodInvoker.java:365)

at org.jboss.resteasy.core.ResourceMethodInvoker.invoke(ResourceMethodInvoker.java:337)

at org.jboss.resteasy.core.ResourceLocatorInvoker.invokeOnTargetObject(ResourceLocatorInvoker.java:137)

at org.jboss.resteasy.core.ResourceLocatorInvoker.invoke(ResourceLocatorInvoker.java:106)

at org.jboss.resteasy.core.ResourceLocatorInvoker.invokeOnTargetObject(ResourceLocatorInvoker.java:132)

at org.jboss.resteasy.core.ResourceLocatorInvoker.invoke(ResourceLocatorInvoker.java:100)

at org.jboss.resteasy.core.SynchronousDispatcher.invoke(SynchronousDispatcher.java:443)

at org.jboss.resteasy.core.SynchronousDispatcher.lambda$invoke$4(SynchronousDispatcher.java:233)

at org.jboss.resteasy.core.SynchronousDispatcher.lambda$preprocess$0(SynchronousDispatcher.java:139)

at org.jboss.resteasy.core.interception.PreMatchContainerRequestContext.filter(PreMatchContainerRequestContext.java:358)

at org.jboss.resteasy.core.SynchronousDispatcher.preprocess(SynchronousDispatcher.java:142)

at org.jboss.resteasy.core.SynchronousDispatcher.invoke(SynchronousDispatcher.java:219)

at org.jboss.resteasy.plugins.server.servlet.ServletContainerDispatcher.service(ServletContainerDispatcher.java:227)

at org.jboss.resteasy.plugins.server.servlet.HttpServletDispatcher.service(HttpServletDispatcher.java:56)

at org.jboss.resteasy.plugins.server.servlet.HttpServletDispatcher.service(HttpServletDispatcher.java:51)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:791)

at io.undertow.servlet.handlers.ServletHandler.handleRequest(ServletHandler.java:74)

at io.undertow.servlet.handlers.FilterHandler$FilterChainImpl.doFilter(FilterHandler.java:129)

at org.keycloak.services.filters.KeycloakSessionServletFilter.doFilter(KeycloakSessionServletFilter.java:90)

at io.undertow.servlet.core.ManagedFilter.doFilter(ManagedFilter.java:61)

at io.undertow.servlet.handlers.FilterHandler$FilterChainImpl.doFilter(FilterHandler.java:131)

at io.undertow.servlet.handlers.FilterHandler.handleRequest(FilterHandler.java:84)

at io.undertow.servlet.handlers.security.ServletSecurityRoleHandler.handleRequest(ServletSecurityRoleHandler.java:62)

at io.undertow.servlet.handlers.ServletChain$1.handleRequest(ServletChain.java:68)

at io.undertow.servlet.handlers.ServletDispatchingHandler.handleRequest(ServletDispatchingHandler.java:36)

at org.wildfly.extension.undertow.security.SecurityContextAssociationHandler.handleRequest(SecurityContextAssociationHandler.java:78)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at io.undertow.servlet.handlers.security.SSLInformationAssociationHandler.handleRequest(SSLInformationAssociationHandler.java:132)

at io.undertow.servlet.handlers.security.ServletAuthenticationCallHandler.handleRequest(ServletAuthenticationCallHandler.java:57)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at io.undertow.security.handlers.AbstractConfidentialityHandler.handleRequest(AbstractConfidentialityHandler.java:46)

at io.undertow.servlet.handlers.security.ServletConfidentialityConstraintHandler.handleRequest(ServletConfidentialityConstraintHandler.java:64)

at io.undertow.security.handlers.AuthenticationMechanismsHandler.handleRequest(AuthenticationMechanismsHandler.java:60)

at io.undertow.servlet.handlers.security.CachedAuthenticatedSessionHandler.handleRequest(CachedAuthenticatedSessionHandler.java:77)

at io.undertow.security.handlers.NotificationReceiverHandler.handleRequest(NotificationReceiverHandler.java:50)

at io.undertow.security.handlers.AbstractSecurityContextAssociationHandler.handleRequest(AbstractSecurityContextAssociationHandler.java:43)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at org.wildfly.extension.undertow.security.jacc.JACCContextIdHandler.handleRequest(JACCContextIdHandler.java:61)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at org.wildfly.extension.undertow.deployment.GlobalRequestControllerHandler.handleRequest(GlobalRequestControllerHandler.java:68)

at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)

at io.undertow.servlet.handlers.ServletInitialHandler.handleFirstRequest(ServletInitialHandler.java:292)

at io.undertow.servlet.handlers.ServletInitialHandler.access$100(ServletInitialHandler.java:81)

at io.undertow.servlet.handlers.ServletInitialHandler$2.call(ServletInitialHandler.java:138)

at io.undertow.servlet.handlers.ServletInitialHandler$2.call(ServletInitialHandler.java:135)

at io.undertow.servlet.core.ServletRequestContextThreadSetupAction$1.call(ServletRequestContextThreadSetupAction.java:48)

at io.undertow.servlet.core.ContextClassLoaderSetupAction$1.call(ContextClassLoaderSetupAction.java:43)

at org.wildfly.extension.undertow.security.SecurityContextThreadSetupAction.lambda$create$0(SecurityContextThreadSetupAction.java:105)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)

at io.undertow.servlet.handlers.ServletInitialHandler.dispatchRequest(ServletInitialHandler.java:272)

at io.undertow.servlet.handlers.ServletInitialHandler.access$000(ServletInitialHandler.java:81)

at io.undertow.servlet.handlers.ServletInitialHandler$1.handleRequest(ServletInitialHandler.java:104)

at io.undertow.server.Connectors.executeRootHandler(Connectors.java:360)

at io.undertow.server.HttpServerExchange$1.run(HttpServerExchange.java:830)

at org.jboss.threads.ContextClassLoaderSavingRunnable.run(ContextClassLoaderSavingRunnable.java:35)

at org.jboss.threads.EnhancedQueueExecutor.safeRun(EnhancedQueueExecutor.java:1985)

at org.jboss.threads.EnhancedQueueExecutor$ThreadBody.doRunTask(EnhancedQueueExecutor.java:1487)

at org.jboss.threads.EnhancedQueueExecutor$ThreadBody.run(EnhancedQueueExecutor.java:1378)

at java.lang.Thread.run(Thread.java:748) 10.52.1.204
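This second failure comes from a bulk read rather than a single-key read. The admin endpoint `RealmAdminResource.getClientSessionStats` iterates the session cache with `entrySet()`. `PartitionHandlingManagerImpl.checkBulkRead` then rejects the call with ISPN000305 while the cache is degraded.

The sketch below is a hypothetical, standard-library-only model of that behavior, assuming the DENY_READ_WRITES strategy. `BulkReadModel`, `snapshotEntries`, and the nested `AvailabilityException` are illustrative stand-ins, not Infinispan classes.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified model of the bulk-read check behind ISPN000305.
// BulkReadModel and its nested AvailabilityException are illustrative
// stand-ins, not Infinispan classes; DENY_READ_WRITES is assumed.
class BulkReadModel {

    enum Mode { AVAILABLE, DEGRADED }

    // Stand-in for org.infinispan.partitionhandling.AvailabilityException.
    static class AvailabilityException extends RuntimeException {
        AvailabilityException(String message) {
            super(message);
        }
    }

    private final Map<String, String> entries = new HashMap<>();
    private Mode mode = Mode.AVAILABLE;

    void put(String key, String value) {
        entries.put(key, value);
    }

    void setMode(Mode newMode) {
        mode = newMode;
    }

    // Analogous to cache.entrySet(): a whole-cache read that is refused
    // outright while the local partition is degraded, even if some of the
    // entries happen to be stored locally.
    Map<String, String> snapshotEntries() {
        if (mode == Mode.DEGRADED) {
            throw new AvailabilityException(
                "Cluster is operating in degraded mode because of node failures.");
        }
        return Collections.unmodifiableMap(new HashMap<>(entries));
    }

    public static void main(String[] args) {
        BulkReadModel sessions = new BulkReadModel();
        sessions.put("session-1", "client-a"); // hypothetical session entries
        sessions.put("session-2", "client-b");
        System.out.println(sessions.snapshotEntries().size()); // prints 2

        sessions.setMode(Mode.DEGRADED); // e.g. after an unexpected pod failure
        try {
            sessions.snapshotEntries();
        } catch (AvailabilityException e) {
            System.out.println("Bulk read rejected: " + e.getMessage());
        }
    }
}
```

The design point is that a whole-cache iteration cannot tell a partial result from a complete one while some segments may have no owner in the local partition. It therefore presumably fails as a unit. Single-key reads whose owners are all present can still succeed in the same degraded cache.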

Sep 19 09:00:32 sso-dev-xtremecloud-sso-gcp-0 xtremecloud-sso-gcp ERROR 14:00:32,701 ERROR [org.keycloak.services.error.KeycloakErrorHandler] (default task-6639) Uncaught server error: org.infinispan.partitionhandling.AvailabilityException: ISPN000305: Cluster is operating in degraded mode because of node failures.

at org.infinispan.partitionhandling.impl.PartitionHandlingManagerImpl.checkBulkRead(PartitionHandlingManagerImpl.java:114)

at org.infinispan.partitionhandling.impl.PartitionHandlingInterceptor.visitEntrySetCommand(PartitionHandlingInterceptor.java:122)

at org.infinispan.commands.read.EntrySetCommand.acceptVisitor(EntrySetCommand.java:61)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNext(BaseAsyncInterceptor.java:54)

at org.infinispan.statetransfer.StateTransferInterceptor.handleDefault(StateTransferInterceptor.java:352)

at org.infinispan.interceptors.DDAsyncInterceptor.visitEntrySetCommand(DDAsyncInterceptor.java:127)

at org.infinispan.commands.read.EntrySetCommand.acceptVisitor(EntrySetCommand.java:61)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNext(BaseAsyncInterceptor.java:54)

at org.infinispan.interceptors.DDAsyncInterceptor.handleDefault(DDAsyncInterceptor.java:54)

at org.infinispan.interceptors.DDAsyncInterceptor.visitEntrySetCommand(DDAsyncInterceptor.java:127)

at org.infinispan.commands.read.EntrySetCommand.acceptVisitor(EntrySetCommand.java:61)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextAndExceptionally(BaseAsyncInterceptor.java:123)

at org.infinispan.interceptors.impl.InvocationContextInterceptor.visitCommand(InvocationContextInterceptor.java:90)

at org.infinispan.interceptors.BaseAsyncInterceptor.invokeNextThenApply(BaseAsyncInterceptor.java:76)

at org.infinispan.interceptors.distribution.DistributionBulkInterceptor.visitEntrySetCommand(DistributionBulkInterceptor.java:59)

at org.infinispan.commands.read.EntrySetCommand.acceptVisitor(EntrySetCommand.java:61)

at org.infinispan.interceptors.DDAsyncInterceptor.visitCommand(DDAsyncInterceptor.java:50)

at org.infinispan.interceptors.impl.AsyncInterceptorChainImpl.invoke(AsyncInterceptorChainImpl.java:248)

at org.infinispan.cache.impl.CacheImpl.entrySet(CacheImpl.java:828)

at org.infinispan.cache.impl.DecoratedCache.entrySet(DecoratedCache.java:590)

at org.infinispan.cache.impl.AbstractDelegatingCache.entrySet(AbstractDelegatingCache.java:407)

at org.infinispan.cache.impl.EncoderCache.entrySet(EncoderCache.java:718)

at org.infinispan.cache.impl.AbstractDelegatingCache.entrySet(AbstractDelegatingCache.java:407)

at org.keycloak.models.sessions.infinispan.InfinispanUserSessionProvider.getActiveClientSessionStats(InfinispanUserSessionProvider.java:430)

at org.keycloak.services.resources.admin.RealmAdminResource.getClientSessionStats(RealmAdminResource.java:623)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.jboss.resteasy.core.MethodInjectorImpl.invoke(MethodInjectorImpl.java:140)

at org.jboss.resteasy.core.ResourceMethodInvoker.internalInvokeOnTarget(ResourceMethodInvoker.java:509)

at org.jboss.resteasy.core.ResourceMethodInvoker.invokeOnTargetAfterFilter(ResourceMethodInvoker.java:399)
at org.jboss.resteasy.core.ResourceMethodInvoker.lambda$invokeOnTarget$0(ResourceMethodInvoker.java:363)
at org.jboss.resteasy.core.interception.PreMatchContainerRequestContext.filter(PreMatchContainerRequestContext.java:358)
at org.jboss.resteasy.core.ResourceMethodInvoker.invokeOnTarget(ResourceMethodInvoker.java:365)
at org.jboss.resteasy.core.ResourceMethodInvoker.invoke(ResourceMethodInvoker.java:337)
at org.jboss.resteasy.core.ResourceLocatorInvoker.invokeOnTargetObject(ResourceLocatorInvoker.java:137)
at org.jboss.resteasy.core.ResourceLocatorInvoker.invoke(ResourceLocatorInvoker.java:106)
at org.jboss.resteasy.core.ResourceLocatorInvoker.invokeOnTargetObject(ResourceLocatorInvoker.java:132)
at org.jboss.resteasy.core.ResourceLocatorInvoker.invoke(ResourceLocatorInvoker.java:100)
at org.jboss.resteasy.core.SynchronousDispatcher.invoke(SynchronousDispatcher.java:443)
at org.jboss.resteasy.core.SynchronousDispatcher.lambda$invoke$4(SynchronousDispatcher.java:233)
at org.jboss.resteasy.core.SynchronousDispatcher.lambda$preprocess$0(SynchronousDispatcher.java:139)
at org.jboss.resteasy.core.interception.PreMatchContainerRequestContext.filter(PreMatchContainerRequestContext.java:358)
at org.jboss.resteasy.core.SynchronousDispatcher.preprocess(SynchronousDispatcher.java:142)
at org.jboss.resteasy.core.SynchronousDispatcher.invoke(SynchronousDispatcher.java:219)
at org.jboss.resteasy.plugins.server.servlet.ServletContainerDispatcher.service(ServletContainerDispatcher.java:227)
at org.jboss.resteasy.plugins.server.servlet.HttpServletDispatcher.service(HttpServletDispatcher.java:56)
at org.jboss.resteasy.plugins.server.servlet.HttpServletDispatcher.service(HttpServletDispatcher.java:51)
at javax.servlet.http.HttpServlet.service(HttpServlet.java:791)
at io.undertow.servlet.handlers.ServletHandler.handleRequest(ServletHandler.java:74)
at io.undertow.servlet.handlers.FilterHandler$FilterChainImpl.doFilter(FilterHandler.java:129)
at org.keycloak.services.filters.KeycloakSessionServletFilter.doFilter(KeycloakSessionServletFilter.java:90)
at io.undertow.servlet.core.ManagedFilter.doFilter(ManagedFilter.java:61)
at io.undertow.servlet.handlers.FilterHandler$FilterChainImpl.doFilter(FilterHandler.java:131)
at io.undertow.servlet.handlers.FilterHandler.handleRequest(FilterHandler.java:84)
at io.undertow.servlet.handlers.security.ServletSecurityRoleHandler.handleRequest(ServletSecurityRoleHandler.java:62)
at io.undertow.servlet.handlers.ServletChain$1.handleRequest(ServletChain.java:68)
at io.undertow.servlet.handlers.ServletDispatchingHandler.handleRequest(ServletDispatchingHandler.java:36)
at org.wildfly.extension.undertow.security.SecurityContextAssociationHandler.handleRequest(SecurityContextAssociationHandler.java:78)
at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
at io.undertow.servlet.handlers.security.SSLInformationAssociationHandler.handleRequest(SSLInformationAssociationHandler.java:132)
at io.undertow.servlet.handlers.security.ServletAuthenticationCallHandler.handleRequest(ServletAuthenticationCallHandler.java:57)
at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
at io.undertow.security.handlers.AbstractConfidentialityHandler.handleRequest(AbstractConfidentialityHandler.java:46)
at io.undertow.servlet.handlers.security.ServletConfidentialityConstraintHandler.handleRequest(ServletConfidentialityConstraintHandler.java:64)
at io.undertow.security.handlers.AuthenticationMechanismsHandler.handleRequest(AuthenticationMechanismsHandler.java:60)
at io.undertow.servlet.handlers.security.CachedAuthenticatedSessionHandler.handleRequest(CachedAuthenticatedSessionHandler.java:77)
at io.undertow.security.handlers.NotificationReceiverHandler.handleRequest(NotificationReceiverHandler.java:50)
at io.undertow.security.handlers.AbstractSecurityContextAssociationHandler.handleRequest(AbstractSecurityContextAssociationHandler.java:43)
at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
at org.wildfly.extension.undertow.security.jacc.JACCContextIdHandler.handleRequest(JACCContextIdHandler.java:61)
at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
at org.wildfly.extension.undertow.deployment.GlobalRequestControllerHandler.handleRequest(GlobalRequestControllerHandler.java:68)
at io.undertow.server.handlers.PredicateHandler.handleRequest(PredicateHandler.java:43)
at io.undertow.servlet.handlers.ServletInitialHandler.handleFirstRequest(ServletInitialHandler.java:292)
at io.undertow.servlet.handlers.ServletInitialHandler.access$100(ServletInitialHandler.java:81)
at io.undertow.servlet.handlers.ServletInitialHandler$2.call(ServletInitialHandler.java:138)
at io.undertow.servlet.handlers.ServletInitialHandler$2.call(ServletInitialHandler.java:135)
at io.undertow.servlet.core.ServletRequestContextThreadSetupAction$1.call(ServletRequestContextThreadSetupAction.java:48)
at io.undertow.servlet.core.ContextClassLoaderSetupAction$1.call(ContextClassLoaderSetupAction.java:43)
at org.wildfly.extension.undertow.security.SecurityContextThreadSetupAction.lambda$create$0(SecurityContextThreadSetupAction.java:105)
at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)
at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)
at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)
at org.wildfly.extension.undertow.deployment.UndertowDeploymentInfoService$UndertowThreadSetupAction.lambda$create$0(UndertowDeploymentInfoService.java:1502)
at io.undertow.servlet.handlers.ServletInitialHandler.dispatchRequest(ServletInitialHandler.java:272)
at io.undertow.servlet.handlers.ServletInitialHandler.access$000(ServletInitialHandler.java:81)
at io.undertow.servlet.handlers.ServletInitialHandler$1.handleRequest(ServletInitialHandler.java:104)
at io.undertow.server.Connectors.executeRootHandler(Connectors.java:360)
at io.undertow.server.HttpServerExchange$1.run(HttpServerExchange.java:830)
at org.jboss.threads.ContextClassLoaderSavingRunnable.run(ContextClassLoaderSavingRunnable.java:35)
at org.jboss.threads.EnhancedQueueExecutor.safeRun(EnhancedQueueExecutor.java:1985)
at org.jboss.threads.EnhancedQueueExecutor$ThreadBody.doRunTask(EnhancedQueueExecutor.java:1487)
at org.jboss.threads.EnhancedQueueExecutor$ThreadBody.run(EnhancedQueueExecutor.java:1378)
at java.lang.Thread.run(Thread.java:748)
Suppressed: org.infinispan.util.logging.TraceException
at org.infinispan.interceptors.impl.SimpleAsyncInvocationStage.get(SimpleAsyncInvocationStage.java:41)
at org.infinispan.interceptors.impl.AsyncInterceptorChainImpl.invoke(AsyncInterceptorChainImpl.java:250)
... 78 more

10.52.1.204

When XtremeCloud SSO pods leave the cluster, you may see warnings like the following, one pair for each affected cache:

21:03:56,159 WARN [org.infinispan.CLUSTER] (remote-thread--p12-t5) [Context=loginFailures] ISPN000316: Lost data because of graceful leaver 10.52.3.21(site-id=site1, rack-id=null, machine-id=null), entering degraded mode
21:03:56,160 INFO [org.infinispan.CLUSTER] (remote-thread--p12-t5) [Context=loginFailures] ISPN100011: Entering availability mode DEGRADED_MODE, topology id 6
21:03:56,169 WARN [org.infinispan.CLUSTER] (remote-thread--p12-t7) [Context=offlineClientSessions] ISPN000316: Lost data because of graceful leaver 10.52.3.21(site-id=site1, rack-id=null, machine-id=null), entering degraded mode
21:03:56,169 INFO [org.infinispan.CLUSTER] (remote-thread--p12-t7) [Context=offlineClientSessions] ISPN100011: Entering availability mode DEGRADED_MODE, topology id 6
21:03:56,258 WARN [org.infinispan.CLUSTER] (remote-thread--p12-t8) [Context=clientSessions] ISPN000316: Lost data because of graceful leaver 10.52.3.21(site-id=site1, rack-id=null, machine-id=null), entering degraded mode
21:03:56,258 INFO [org.infinispan.CLUSTER] (remote-thread--p12-t8) [Context=clientSessions] ISPN100011: Entering availability mode DEGRADED_MODE, topology id 6
21:03:56,274 WARN [org.infinispan.CLUSTER] (remote-thread--p12-t9) [Context=offlineSessions] ISPN000316: Lost data because of graceful leaver 10.52.3.21(site-id=site1, rack-id=null, machine-id=null), entering degraded mode
21:03:56,275 INFO [org.infinispan.CLUSTER] (remote-thread--p12-t9) [Context=offlineSessions] ISPN100011: Entering availability mode DEGRADED_MODE, topology id 6
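Each affected cache logs the same two messages: ISPN000316 names the graceful leaver, and ISPN100011 announces the new availability mode and topology id. With many caches involved, a small scanner can summarize which caches went degraded and which leaver was blamed. The sketch below assumes the log lines follow exactly the layout shown above; the class `DegradedModeScanner` and its methods are hypothetical and are not part of Infinispan or XtremeCloud.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical log helper (not an Infinispan or XtremeCloud API).
// Assumes the "[Context=<cache>] ISPNxxxxxx: ..." layout shown in the log above.
public class DegradedModeScanner {

    // Matches "[Context=<cache>] ISPN000316: Lost data because of graceful leaver <address>(..."
    private static final Pattern LOST_DATA = Pattern.compile(
            "\\[Context=([^\\]]+)\\] ISPN000316: Lost data because of graceful leaver ([^(\\s]+)");

    // Matches "[Context=<cache>] ISPN100011: Entering availability mode <MODE>, topology id <n>"
    private static final Pattern MODE_CHANGE = Pattern.compile(
            "\\[Context=([^\\]]+)\\] ISPN100011: Entering availability mode (\\w+), topology id (\\d+)");

    /** Latest availability mode reported per cache, in first-seen cache order. */
    public static Map<String, String> availabilityByCache(List<String> lines) {
        Map<String, String> modes = new LinkedHashMap<>();
        for (String line : lines) {
            Matcher m = MODE_CHANGE.matcher(line);
            if (m.find()) {
                modes.put(m.group(1), m.group(2)); // later lines overwrite earlier modes
            }
        }
        return modes;
    }

    /** Address of the graceful leaver blamed for data loss, per cache. */
    public static Map<String, String> leaverByCache(List<String> lines) {
        Map<String, String> leavers = new LinkedHashMap<>();
        for (String line : lines) {
            Matcher m = LOST_DATA.matcher(line);
            if (m.find()) {
                leavers.put(m.group(1), m.group(2));
            }
        }
        return leavers;
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
                "21:03:56,159 WARN [org.infinispan.CLUSTER] (remote-thread--p12-t5) [Context=loginFailures] "
                        + "ISPN000316: Lost data because of graceful leaver 10.52.3.21(site-id=site1, "
                        + "rack-id=null, machine-id=null), entering degraded mode",
                "21:03:56,160 INFO [org.infinispan.CLUSTER] (remote-thread--p12-t5) [Context=loginFailures] "
                        + "ISPN100011: Entering availability mode DEGRADED_MODE, topology id 6");

        Map<String, String> leavers = leaverByCache(sample);
        for (Map.Entry<String, String> e : availabilityByCache(sample).entrySet()) {
            if ("DEGRADED_MODE".equals(e.getValue())) {
                // prints: loginFailures -> DEGRADED_MODE (graceful leaver 10.52.3.21)
                System.out.println(e.getKey() + " -> " + e.getValue()
                        + " (graceful leaver " + leavers.getOrDefault(e.getKey(), "unknown") + ")");
            }
        }
    }
}
```

Matching on the full message text, rather than only on the ISPN codes, keeps the patterns strict. If your logging subsystem uses a different pattern layout, the regular expressions would need to be adjusted accordingly.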

Let’s examine why these messages are logged.