XtremeCloud Data Grid-web

Concept of Operations

Replication Tiers

The XtremeCloud Data Grid consists of three (3) distinct tiers of bi-directional replication (BDR) between Cloud Service Providers (CSP) or between a CSP and an on-premise private cloud.

The first tier is the cluster of relational databases at each Cloud Service Provider (CSP) that underlies our XtremeCloud applications. We refer to this tier as XtremeCloud Data Grid-db. The supported databases are shown in the XtremeCloud Applications Certification Matrix.

The second data replication tier is the web cache tier, XtremeCloud Data Grid-web.

XtremeCloud Data Grid-web Cross-Cloud or Cross-Region Replication

XtremeCloud SSO uses XtremeCloud Data Grid-web for the replication of web cache data between, or within, the Cloud Service Providers (CSP). We use the Remote Client-Server Mode which provides a managed, distributed, and clusterable data grid server. This is depicted in the Enterprise Deployment Diagram.

Note: XtremeCloud SSO Version 3.0.1 uses Infinispan 9.3.1 for client purposes and calls the remote Kubernetes service for XtremeCloud Data Grid-web Version 3.0.1, which is based on Infinispan 9.3.3. For a complete list of compatibilities, please refer to the Certification Matrix.

Let’s look at a typical scenario and discuss the interactions between XtremeCloud SSO and XtremeCloud Data Grid-web.

In a typical scenario, an end user’s browser sends an HTTPS request to a front-end load balancer service. In XtremeCloud applications, this is either an NGINX Kubernetes Ingress Controller (KIC) or an Aspen Mesh (Istio) Gateway.

The NGINX KIC, as an example, terminates SSL and forwards unencrypted HTTP requests to the underlying XtremeCloud SSO Kubernetes pods, which are spread amongst multiple CSPs. Our certified Global Services Load Balancers (GSLB) provide sticky sessions, which means that HTTPS requests from one user’s browser are always forwarded to the same XtremeCloud SSO instance in the same Cloud Service Provider (CSP) or on-premise data center.

There are also other HTTPS requests, which are sent from client applications to the GSLB. Those HTTPS requests are backchannel requests. They are not seen by the end user’s browser and will not be part of a sticky session between a user and the GSLB. This means that the GSLB will forward the particular HTTPS request to any XtremeCloud SSO instance in any CSP. This poses a challenge, since some OpenID Connect (OIDC) or SAML flows require multiple HTTPS requests from both the end user and the application itself. Since we can’t rely on sticky sessions, some data must be replicated at the database level between CSPs so that it is available to subsequent HTTPS requests within a particular flow. XtremeCloud Data Grid-db is the way that data is reliably and consistently replicated between clouds.
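The difference between browser traffic and backchannel traffic can be sketched with a toy model. This is only an illustration under stated assumptions: the `GlobalLoadBalancer` class, the site names, and the round-robin choice are hypothetical and do not describe how any certified GSLB actually selects a site.

```python
import itertools

SITES = ["site1-gcp", "site2-azure"]  # hypothetical site labels


class GlobalLoadBalancer:
    """Toy GSLB: browser traffic is pinned to a site, backchannel traffic is not."""

    def __init__(self, sites):
        self._round_robin = itertools.cycle(list(sites))
        self._sticky = {}  # browser id -> pinned site

    def route_browser(self, browser_id):
        # The first request picks a site; later requests reuse it (sticky session).
        if browser_id not in self._sticky:
            self._sticky[browser_id] = next(self._round_robin)
        return self._sticky[browser_id]

    def route_backchannel(self):
        # Backchannel calls carry no browser affinity, so any site may answer.
        return next(self._round_robin)


gslb = GlobalLoadBalancer(SITES)
browser_sites = {gslb.route_browser("ssmith-browser") for _ in range(4)}
backchannel_sites = {gslb.route_backchannel() for _ in range(4)}
```

In this model the browser always lands on one site while the backchannel calls reach both, which is why any state needed by both kinds of request has to be visible at every CSP.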

Authentication Sessions

There is a separate XtremeCloud Data Grid-web cache named authenticationSessions that is used to save data during authentication of a particular user. This cache usually involves just the end user’s browser and the XtremeCloud SSO Kubernetes pod, not the protected application. Therefore, we rely on sticky sessions, and the authenticationSessions cache content doesn’t need to be replicated between the CSPs.

Action Tokens

Action Tokens are typically used in scenarios where a user needs to confirm an action asynchronously via an email exchange, for example during a forgotten password flow. The actionTokens XtremeCloud Data Grid-web cache is used to track metadata about actionTokens (for example, which actionToken was already used, so that it can’t be reused). The actionTokens are replicated between the Cloud Service Providers (CSP).
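A minimal sketch of single-use tracking follows. It assumes a put-if-absent style write and synchronous replication to both sites; the `ReplicatedCache` class and `redeem_action_token` function are hypothetical names for illustration, not the XtremeCloud SSO implementation.

```python
class ReplicatedCache:
    """Toy stand-in for a cache replicated between two sites."""

    def __init__(self):
        self._site_copies = {"site1": {}, "site2": {}}

    def put_if_absent(self, site, key, value):
        # Returns True only for the first writer of the key.
        if key in self._site_copies[site]:
            return False
        for copy in self._site_copies.values():  # replicate to every site
            copy[key] = value
        return True


def redeem_action_token(cache, site, token_id):
    """Accept a token only the first time it is presented, at any site."""
    return cache.put_if_absent(site, token_id, "used")


action_tokens = ReplicatedCache()
first_use = redeem_action_token(action_tokens, "site1", "reset-password-123")
replay = redeem_action_token(action_tokens, "site2", "reset-password-123")
```

Because the "used" marker is copied to both sites, the replay at Site 2 is rejected even though the token was first redeemed at Site 1.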

Use of XtremeCloud Data Grid-db

XtremeCloud SSO uses XtremeCloud Data Grid-db to persist metadata about realms, clients, users, and much more. In the cross-cloud setup, we ensure that both XtremeCloud Data Grid-db clusters talk to each other over a high-speed, low-latency interconnect.

When XtremeCloud SSO services in our designated Site 1 (Google Cloud Platform (GCP)) persist any data to the underlying relational database and a transaction is committed, that data is replicated to the XtremeCloud Data Grid-db on the designated Site 2 (Microsoft Azure).

Caching and Invalidation of Persistent Data

XtremeCloud SSO uses XtremeCloud Data Grid-web to cache persistent data and avoid many unnecessary round-trips to the database. Caching yields significant performance gains; however, it presents an additional challenge. When an XtremeCloud SSO Kubernetes pod updates any data, all XtremeCloud SSO Kubernetes pods in both CSPs need to be made aware of it, so that they invalidate that data in their caches. XtremeCloud SSO uses local XtremeCloud Data Grid-web caches named realms, users, and authorization to cache persistent data.

We use a separate cache named work, which is replicated between the CSPs. The work cache itself doesn’t cache any real data. It is used just for sending invalidation messages between cluster nodes and CSPs. For example, when some data is updated (say user “ssmith” is updated), the particular XtremeCloud SSO Kubernetes pod sends the invalidation message to all other clustered XtremeCloud SSO pods in the same Kubernetes cluster and also to the other Kubernetes cluster at the remote CSP. Every XtremeCloud SSO pod at the remote CSP then invalidates the affected data in its local cache once it receives the invalidation message. This invalidation, of course, results in a cache repopulation from the underlying database in the XtremeCloud Data Grid-db.
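The invalidation flow described above can be sketched as follows. This is a simplified model, not the product code: `SsoPod`, `WorkChannel`, and the pod names are hypothetical, and a single dictionary stands in for the database that XtremeCloud Data Grid-db keeps in sync at both sites.

```python
class WorkChannel:
    """Stand-in for the work cache: carries invalidation messages, never data."""

    def __init__(self):
        self._pods = []

    def register(self, pod):
        self._pods.append(pod)

    def publish(self, sender, username):
        for pod in self._pods:
            if pod is not sender:
                pod.on_invalidate(username)


class SsoPod:
    def __init__(self, name, database, work):
        self.name = name
        self._db = database
        self._work = work
        self.users = {}  # local "users" cache
        work.register(self)

    def get_user(self, username):
        if username not in self.users:
            self.users[username] = self._db[username]  # repopulate from the DB
        return self.users[username]

    def update_user(self, username, data):
        self._db[username] = data
        self.users[username] = data
        self._work.publish(self, username)  # tell every other pod to drop it

    def on_invalidate(self, username):
        self.users.pop(username, None)


db = {"ssmith": {"email": "old@example.com"}}
work = WorkChannel()
pods = [SsoPod(n, db, work) for n in ("site1-a", "site1-b", "site2-a")]
for pod in pods:
    pod.get_user("ssmith")  # warm every local cache

pods[0].update_user("ssmith", {"email": "new@example.com"})
evicted_remotely = "ssmith" not in pods[2].users
reloaded_email = pods[2].get_user("ssmith")["email"]
```

Only the key travels over the channel; the remote pod rereads the value from the database on its next lookup.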

User Sessions

There are XtremeCloud Data Grid-web caches, named sessions and offlineSessions, that are replicated between CSPs. These replicated caches are used to save data about user sessions, which are valid for the life of one user’s browser session. The caches need to deal with the HTTPS requests from the end user to and from an application. As described above, sticky sessions can’t be reliably used, but we still want to ensure that subsequent HTTPS requests can see the latest data. Therefore, this data is replicated.

Protection Against A Brute Force Attack

The loginFailures cache is used to track data about failed logins (for example, how many times user ‘ssmith’ entered the wrong password on a login screen). To have an accurate count of login failures, cross-cloud replication is performed.
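To see why the count must be shared, consider a toy model in which an attacker alternates between sites. The `LoginFailures` class and the `MAX_FAILURES` threshold of 3 are assumptions made for this sketch only.

```python
from collections import Counter

MAX_FAILURES = 3  # hypothetical lockout threshold


class LoginFailures:
    """Toy failure counter kept per site, optionally replicated to all sites."""

    def __init__(self, sites):
        self._per_site = {site: Counter() for site in sites}

    def record_failure(self, site, username, replicate=True):
        # With replication every site sees the failure; without it only one does.
        targets = self._per_site.values() if replicate else [self._per_site[site]]
        for counter in targets:
            counter[username] += 1

    def is_locked(self, site, username):
        return self._per_site[site][username] >= MAX_FAILURES


def attack(replicate):
    failures = LoginFailures(["site1", "site2"])
    for site in ["site1", "site2", "site1"]:  # attacker hops between sites
        failures.record_failure(site, "ssmith", replicate=replicate)
    return failures.is_locked("site1", "ssmith")


locked_with_replication = attack(replicate=True)
locked_without_replication = attack(replicate=False)
```

Without replication each site sees only part of the attack and the threshold is never reached at either one.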

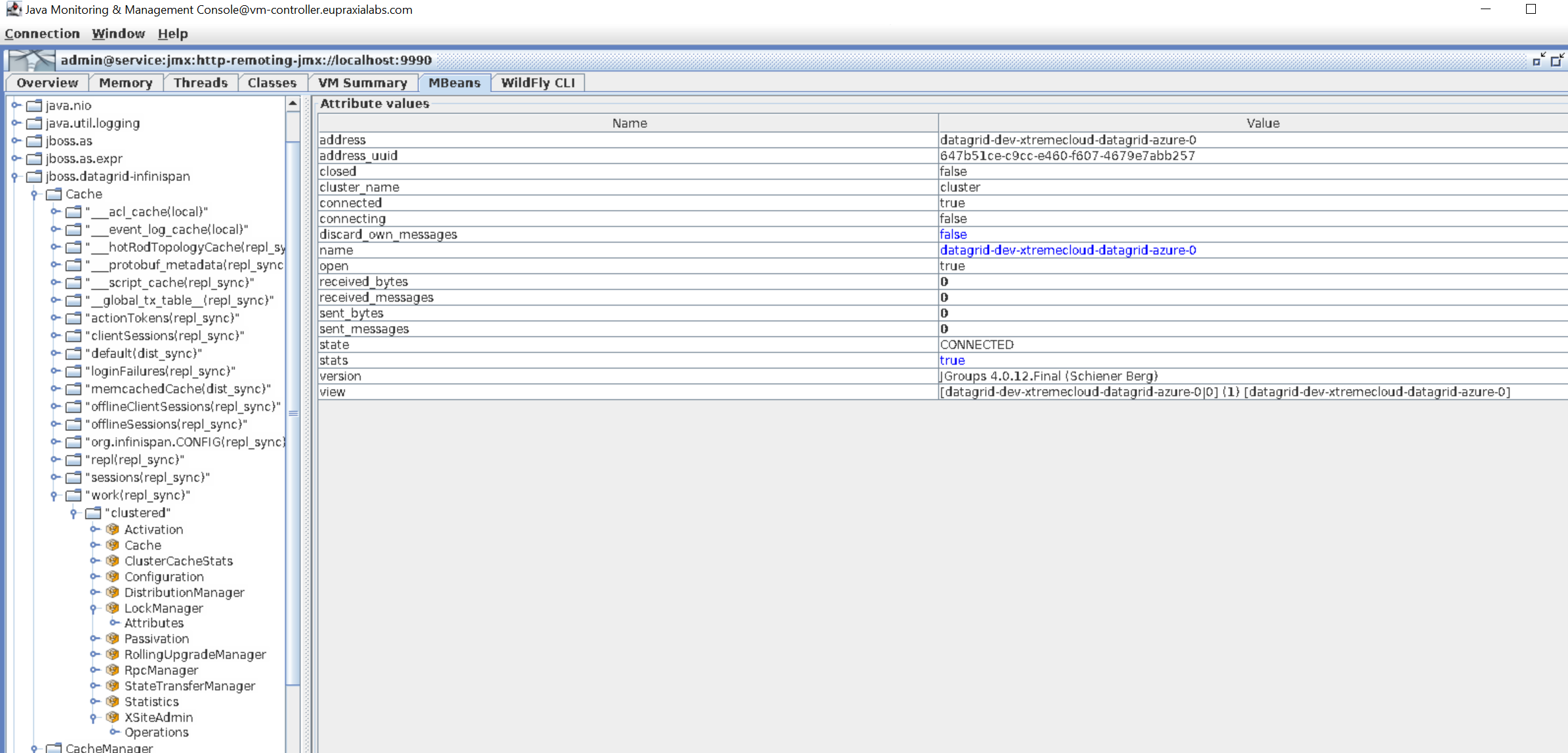

Here is a view of the replicated caches in JConsole.

For more information about setting up JConsole to monitor and manage the XtremeCloud Data Grid-web, take a look at this Eupraxia Labs blog.

Communication Essentials

Clearly, behind the scenes, there are multiple separate XtremeCloud Data Grid-web clusters here. Every XtremeCloud SSO pod is in a cluster with the other XtremeCloud SSO pods within the same CSP, but not with the XtremeCloud SSO pods within a Kubernetes cluster at a different CSP. An XtremeCloud SSO pod does not communicate directly with the XtremeCloud SSO Kubernetes pods at another CSP. Instead, XtremeCloud SSO pods make calls to an external XtremeCloud Data Grid-web pod (itself in a cluster) for communication between CSPs. This is done through a binary protocol known as HotRod.

The XtremeCloud Data Grid-web caches on the XtremeCloud SSO side need to be configured with the remoteStore attribute to ensure that data is saved to the remote cache over the high-performing HotRod protocol. The XtremeCloud Data Grid-web pods at the two sites form a separate cluster of their own, so the data saved on the XtremeCloud Data Grid-web pod on Site 1 is replicated to the XtremeCloud Data Grid-web pod on Site 2.

The receiving XtremeCloud Data Grid-web Kubernetes pods, which are load-balanced by an NGINX Kubernetes Ingress Controller, then notify the XtremeCloud SSO Kubernetes pods in their cluster through Client Listeners, a feature of the HotRod protocol. The XtremeCloud SSO Kubernetes pods on Site 2 then update their XtremeCloud Data Grid-web caches, and the particular userSession update becomes available on the XtremeCloud SSO pods on Site 2 as well.
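The end-to-end path (remote store write, cross-site replication, client listener notification) can be modeled roughly as below. All class and method names are hypothetical, the calls are synchronous for simplicity, and the listener is reduced to a plain callback that receives a key and then reads the value back from its own site's grid.

```python
class DataGridSite:
    """Toy XtremeCloud Data Grid-web cluster at one site."""

    def __init__(self, name):
        self.name = name
        self.entries = {}
        self.backup = None    # peer site for cross-site replication
        self._listeners = []  # callbacks registered by local SSO pods

    def add_client_listener(self, callback):
        self._listeners.append(callback)

    def put(self, key, value, from_backup=False):
        self.entries[key] = value
        for notify in self._listeners:
            notify(key)  # the event carries only the key
        if self.backup is not None and not from_backup:
            self.backup.put(key, value, from_backup=True)  # no ping-pong


class SsoPod:
    def __init__(self, name, grid):
        self.name = name
        self.sessions = {}  # local sessions cache
        self._grid = grid
        grid.add_client_listener(self._on_remote_event)

    def save_session(self, session_id, data):
        self.sessions[session_id] = data
        self._grid.put(session_id, data)  # write-through to the remote store

    def _on_remote_event(self, key):
        self.sessions[key] = self._grid.entries[key]


site1, site2 = DataGridSite("site1"), DataGridSite("site2")
site1.backup, site2.backup = site2, site1
pod_site1 = SsoPod("sso-site1", site1)
pod_site2 = SsoPod("sso-site2", site2)

pod_site1.save_session("session-1", {"user": "ssmith"})
seen_at_site2 = pod_site2.sessions.get("session-1")
```

Note that the two SSO pods never call each other; the only link between the sites is the one between the two data grid objects.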

Here is an excerpt from the clustered configuration file in the cross-cloud Helm Chart for XtremeCloud Data Grid-web at Site 2:

Note: The clustering configuration file is injected into the initial start-up of the XtremeCloud Data Grid-web pods using a Kubernetes ConfigMap. This ConfigMap is deployed with the XtremeCloud Data Grid-web Helm Chart.

    <subsystem xmlns="urn:infinispan:server:jgroups:9.3">
        <channels default="cluster">
            <channel name="cluster"/>
            <channel name="xsite" stack="tcp"/>
        </channels>
        <stacks default="${jboss.default.jgroups.stack:kubernetes}">
            <stack name="tcp">
                <transport type="TCP" socket-binding="jgroups-tcp-relay">
                    <property name="log_discard_msgs">false</property>
                    <property name="external_addr">40.121.193.215</property> <!-- Site 2 IP address -->
                </transport>
                <protocol type="TCPPING">
                    <property name="initial_hosts">35.225.2.57[7601],40.121.193.215[7601]</property> <!-- Site 1 (GCP/GKE) and Site 2 (Azure/AKS) IP addresses and ports -->
                    <property name="port_range">0</property>
                    <property name="ergonomics">false</property>
                </protocol>
                <protocol type="MERGE3">
                    <property name="min_interval">10000</property>
                    <property name="max_interval">30000</property>
                </protocol>

Of particular note here is that the Site 2 external address is the external IP address of the Kubernetes load balancer at Microsoft Azure (Site 2). The values of the IP addresses and port numbers are actually populated by the Helm Chart during the XtremeCloud Data Grid-web deployment.
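As a small illustration of the `host[port]` format used by the initial_hosts property, the following sketch renders and parses such a list. The helper names are hypothetical; the actual Helm Chart templating is not shown in this document and may work differently.

```python
import re

# One TCPPING member: an address followed by a port in square brackets.
HOST_ENTRY = re.compile(r"^(?P<host>[^\[\]]+)\[(?P<port>\d+)\]$")


def render_initial_hosts(members):
    """Build the comma-separated host[port] string from (host, port) pairs."""
    return ",".join(f"{host}[{port}]" for host, port in members)


def parse_initial_hosts(value):
    """Split a TCPPING initial_hosts value into (host, port) pairs."""
    members = []
    for entry in value.split(","):
        match = HOST_ENTRY.match(entry.strip())
        if match is None:
            raise ValueError(f"not in host[port] form: {entry!r}")
        members.append((match.group("host"), int(match.group("port"))))
    return members


members = parse_initial_hosts("35.225.2.57[7601],40.121.193.215[7601]")
round_trip = render_initial_hosts(members)
site2_listed = ("40.121.193.215", 7601) in members
```

A check like `site2_listed` is one way to confirm that the external_addr of a site also appears in the member list that both sites share.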

Here are the Helm releases deployed through a Codefresh CI/CD pipeline:

    [centos@vm-controller ~]$ helm ls
    NAME               REVISION  UPDATED                   STATUS    CHART                             APP VERSION  NAMESPACE
    cert-manager       1         Fri Aug 9 12:02:04 2019   DEPLOYED  cert-manager-v0.7.1               v0.7.1       cert-manager
    datagrid-dev       29        Thu Aug 8 08:01:18 2019   DEPLOYED  xtremecloud-datagrid-azure-3.0.0  9.3.3        dev
    sso-dev            2         Sat Aug 10 12:37:10 2019  DEPLOYED  xtremecloud-sso-azure-3.0.2       4.8.3        dev
    xtremecloud-nginx  1         Sun Aug 11 08:33:14 2019  DEPLOYED  nginx-ingress-1.14.0              0.25.0       kube-system

Let’s look at the Kubernetes services at Azure Kubernetes Service (AKS):

    [centos@vm-controller ~]$ kubectl get svc
    NAME                                     TYPE          CLUSTER-IP    EXTERNAL-IP     PORT(S)         AGE
    cm-acme-http-solver-xsps5                NodePort      10.0.14.185   <none>          8089:30152/TCP  39d
    datagrid-dev-xtremecloud-datagrid-azure  ClusterIP     10.0.76.96    <none>          9990/TCP        62d
    sso-dev-xtremecloud-sso-azure            ClusterIP     10.0.167.236  <none>          8080/TCP        39d
    xcdg-restful-server                      ClusterIP     10.0.171.160  <none>          8080/TCP        65d
    xcdg-server-hotrod                       ClusterIP     10.0.19.212   <none>          11222/TCP       65d
    xtremecloud-datagrid-azure               LoadBalancer  10.0.49.145   40.121.193.215  7601:31244/TCP  62d

Now, let’s inspect the xtremecloud-datagrid-azure service:

    [centos@vm-controller ~]$ kubectl get svc xtremecloud-datagrid-azure -o yaml
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
      creationTimestamp: "2019-07-17T21:43:23Z"
      labels:
        app: xtremecloud-datagrid-azure
        app.kubernetes.io/instance: datagrid-dev
        app.kubernetes.io/managed-by: Tiller
        app.kubernetes.io/name: xtremecloud-datagrid-azure
        helm.sh/chart: xtremecloud-datagrid-azure-3.0.0
      name: xtremecloud-datagrid-azure
      namespace: dev
      resourceVersion: "33957689"
      selfLink: /api/v1/namespaces/dev/services/xtremecloud-datagrid-azure
      uid: e6b46fab-a8db-11e9-bb6b-96c605ceb55a
    spec:
      clusterIP: 10.0.49.145
      externalTrafficPolicy: Cluster
      ports:
      - nodePort: 31244
        port: 7601
        protocol: TCP
        targetPort: 7601
      selector:
        app: xtremecloud-datagrid-azure
      sessionAffinity: None
      type: LoadBalancer
    status:
      loadBalancer:
        ingress:
        - ip: 40.121.193.215

The NGINX KIC (annotation kubernetes.io/ingress.class: nginx) will load balance the inbound Port 7601 replication traffic to the cluster of XtremeCloud Data Grid-web pods.

Partitioning Recovery

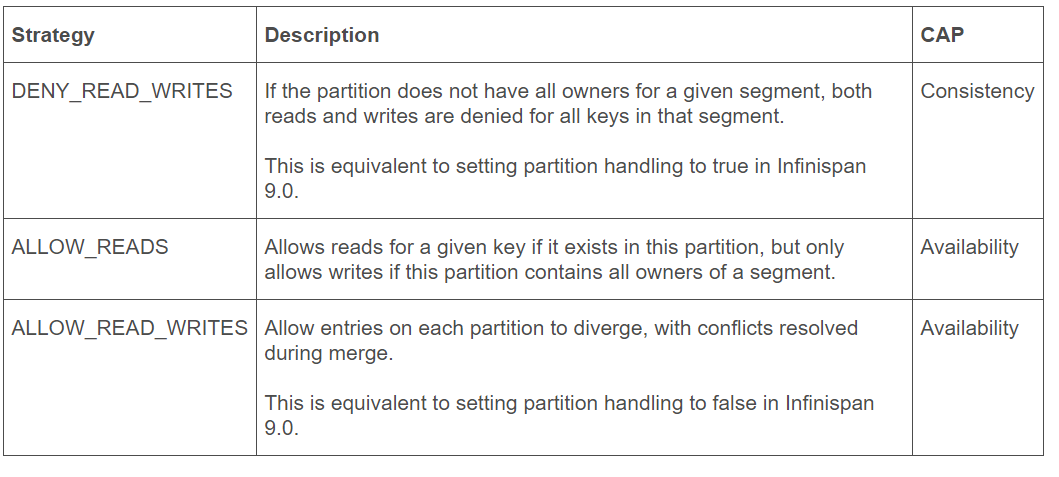

In Infinispan 9.1.0.Final, the underlying open source component of XtremeCloud Data Grid-web, the behavior and configuration of partition handling in distributed and replicated caches were overhauled. Partition handling is no longer simply enabled or disabled. Instead, a partition handling strategy is configured. This allows for more fine-grained control of a cache’s behavior when a split-brain scenario occurs. Furthermore, a ConflictManager component was created so that conflicts on cache entries can be resolved on demand by users and/or automatically during partition merges.

A partition handling strategy determines what operations can be performed on a cache when a split-brain event has occurred. Ultimately, in terms of Brewer’s CAP theorem, the configured strategy determines whether the cache’s availability or consistency is sacrificed in the presence of partition(s).
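The trade-off can be sketched as a small decision function. The three strategy names follow Infinispan's PartitionHandling options; the function itself is a deliberate simplification and an assumption of this sketch, since the real behavior is decided per key and depends on whether the partition still holds all owners of that key.

```python
from enum import Enum


class PartitionHandling(Enum):
    DENY_READ_WRITES = "consistency first"
    ALLOW_READS = "reads stay available"
    ALLOW_READ_WRITES = "availability first"


def is_allowed(strategy, operation, degraded):
    """Return True if `operation` ("read" or "write") may proceed."""
    if operation not in ("read", "write"):
        raise ValueError(f"unknown operation: {operation!r}")
    if not degraded:
        return True  # healthy cluster: everything is allowed
    if strategy is PartitionHandling.ALLOW_READ_WRITES:
        return True  # stay available, resolve conflicts at merge time
    if strategy is PartitionHandling.ALLOW_READS:
        return operation == "read"  # serve reads, refuse writes
    return False  # DENY_READ_WRITES: refuse both until the partition heals


# Decision table for a cache that is currently split.
decisions = {
    (strategy.name, operation): is_allowed(strategy, operation, degraded=True)
    for strategy in PartitionHandling
    for operation in ("read", "write")
}
```

Reading the table from DENY_READ_WRITES to ALLOW_READ_WRITES moves the cache from favoring consistency toward favoring availability.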

Let’s take a look at the merge policies available in XtremeCloud Data Grid-web:

Specific issues with handling a split-brain in XtremeCloud Data Grid-web are addressed in this troubleshooting guide.

Cloud Service Provider (CSP) Session Stickiness

To reiterate a point, all XtremeCloud applications share one common trait: any web client that connects to a specific Cloud Service Provider (CSP) remains on that CSP for the duration of the HTTP session. We handle that through sticky sessions on both Cloudflare global load balancing and F5 Cloud Services DNS (Global Load Balancing Services). Web (HTTP) clients of XtremeCloud applications on the same CSP see immediate and consistent data in the application. If a user logs out and the subsequent HTTPS connection goes to the other CSP, it is possible that the data has not yet been replicated to the second CSP.

The challenge of managing mission-critical replicated data between Cloud Service Providers (CSP) is to ensure that the end user’s expectations are met with just-in-time (JIT) data over widely distributed applications like XtremeCloud Single Sign-On (SSO).

We configure, we test, we tune, and we achieve.

XtremeCloud Data Grid Web Cache Containers with Multiple Network Interfaces

A unique characteristic of the next generation (ng) of XtremeCloud application pods is the use of multiple network interfaces. The separate management and data planes will further optimize performance within each Cloud Service Provider (CSP).

Installation and Configuration

To begin the Kubernetes cluster deployment of XtremeCloud Data Grid-web to multiple Cloud Service Providers (CSP), refer to this Quick Start Guide.